I was trying to understand conditional entropy better. What confused me specifically was the difference between $H[Y|X=x]$ and $H[Y|X]$.

$H[Y|X=x]$ makes some sense to me intuitively because it's just the average unpredictability (i.e. information) of the random variable $Y$ given that the event $X=x$ has happened (though I'm not sure if there is more to it).

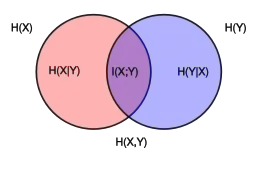

I do know that the definition of $H[Y|X]$ is:

$$H[Y|X] = \sum_x p(x) \, H[Y|X=x]$$

But I was having trouble interpreting it and, more importantly, understanding the exact difference between $H[Y|X=x]$ and $H[Y|X]$.
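
To make the two quantities concrete, here is a minimal Python sketch of how I currently read the definition. The joint distribution and the names `joint`, `marginal_x`, `entropy_given_x` and `conditional_entropy` are made up purely for illustration, and entropies are measured in bits.

```python
import math

# Hypothetical joint distribution p(x, y), invented for illustration only.
joint = {
    ("x0", "y0"): 0.5,   # when X = x0, Y is always y0
    ("x1", "y0"): 0.25,  # when X = x1, Y is a fair coin
    ("x1", "y1"): 0.25,
}

def marginal_x(joint):
    """p(x), obtained by summing p(x, y) over y."""
    px = {}
    for (x, _), p in joint.items():
        px[x] = px.get(x, 0.0) + p
    return px

def entropy_given_x(joint, x):
    """H[Y|X=x]: entropy of the conditional distribution p(y|x), one fixed x."""
    px = marginal_x(joint)[x]
    h = 0.0
    for (xi, _), p in joint.items():
        if xi == x and p > 0:
            q = p / px  # p(y|x)
            h -= q * math.log2(q)
    return h

def conditional_entropy(joint):
    """H[Y|X]: the p(x)-weighted average of H[Y|X=x] over all x."""
    px = marginal_x(joint)
    return sum(p * entropy_given_x(joint, x) for x, p in px.items())

for x in marginal_x(joint):
    print(x, entropy_given_x(joint, x))
print("H[Y|X] =", conditional_entropy(joint))
```

If I read the definition correctly, $H[Y|X=x]$ in this toy example is a number that depends on which $x$ was observed (0 bits for `x0`, 1 bit for `x1`), while $H[Y|X]$ is the single $p(x)$-weighted average of those numbers (0.5 bits).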