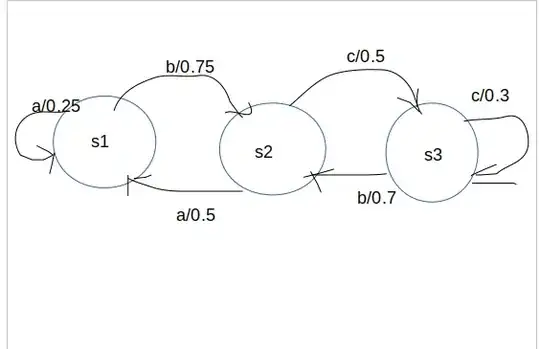

An information source over the alphabet $A = \{a, b, c\}$ is represented by the state transition diagram above.

The $i$-th output of this information source is represented by the random variable $X_i$. Suppose the source is currently in state $s_1$, and let $H(X_i \mid s_1)$ denote the entropy of the next symbol $X_i$ observed from this state. Find the value of $H(X_i \mid s_1)$ and the entropy of this information source, then calculate $H(X_i \mid X_{i-1})$ and $H(X_i)$. Assume $i$ is large.

How can I find $H(X_i \mid s_1)$? I know that $H(X_i \mid s_1) = -\sum_{i} p\left(x_i, s_1\right)\cdot\log_b\!\left(p\left(x_i \mid s_1\right)\right) = -\sum_{i} p\left(x_i, s_1\right)\cdot\log_b\!\left(\frac{p\left(x_i, s_1\right)}{p\left(s_1\right)}\right)$, but I don't know $p(s_1)$.

The state transition matrix (written $P$ here to avoid a clash with the alphabet $A$) is

$P=\begin{pmatrix}0.25 & 0.75 & 0\\0.5 & 0 & 0.5 \\0 & 0.7 & 0.3 \end{pmatrix}.$

From the matrix I know that $p(s_1 \mid s_1)=0.25$, and so on.

But what is the probability of $s_1$, and how can I calculate $H(X_i \mid X_{i-1})$? Does this involve the stationary distribution too?
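
For reference, here is a minimal numerical sketch of how the state probabilities could be obtained, assuming the matrix in the question is row-stochastic (row $k$ holds the transition probabilities out of state $s_k$) and that the chain has a unique stationary distribution $\pi$ with $\pi P = \pi$. The names `P`, `stationary_distribution` and `row_entropy` are chosen here for illustration. The symbol labels on the edges are only in the diagram, so the code works at the level of state transitions; the row entropy equals $H(X_i \mid s_k)$ only under the further assumption that each transition out of a state emits a distinct symbol.

```python
import numpy as np

# Transition matrix from the question; row k = probabilities of leaving state s_(k+1).
P = np.array([[0.25, 0.75, 0.0],
              [0.5,  0.0,  0.5],
              [0.0,  0.7,  0.3]])

def stationary_distribution(P):
    """Solve pi @ P = pi together with sum(pi) = 1 (assumes a unique solution)."""
    n = P.shape[0]
    # Stack the balance equations (P^T - I) pi = 0 with the normalisation row.
    lhs = np.vstack([P.T - np.eye(n), np.ones((1, n))])
    rhs = np.zeros(n + 1)
    rhs[-1] = 1.0
    pi, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return pi

def row_entropy(row):
    """Entropy in bits of one row, skipping zero-probability entries."""
    p = row[row > 0]
    return float(-np.sum(p * np.log2(p)))

pi = stationary_distribution(P)                    # long-run state probabilities
row_H = np.array([row_entropy(r) for r in P])      # entropy of the next transition per state
rate = float(pi @ row_H)                           # stationary average of the row entropies

print("pi    =", np.round(pi, 4))
print("row_H =", np.round(row_H, 4))
print("rate  =", round(rate, 4))
```

For this matrix the stationary distribution works out to $\pi = (0.28, 0.42, 0.30)$, which can be checked by hand from $\pi P = \pi$. Whether `rate` coincides with $H(X_i \mid X_{i-1})$ depends on how symbols are attached to transitions in the diagram, so that identification is left open here.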