I am developing a Python application in which some sections require a lot of CPU for calculations.

However, I notice that even in these sections, CPU usage never goes above 50%.

As a result, the program runs slowly even though there is CPU capacity "to spare" that could make it faster.

For example, the code below takes 15 seconds on my PC:

```python
from math import cos
import time

ini = time.time()

# CPU-bound loop: 10**8 calls to cos() in a single thread
for x in range(10**8):
    a = cos(x)

print("Total Time: ", time.time() - ini)
```

But during processing only a few logical processors are used, only one of them is under heavy load, and even that one never reaches 100%.
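A single-threaded CPython loop like the one above can keep at most about one logical processor busy at any moment, and the operating system may move that thread between processors, which can spread the load over several of them in a CPU monitor. The sketch below is one way to check this: it times a shorter version of the loop (the size `n = 10**7` and the helper `busy_loop` are illustrative assumptions, not part of the original code) and compares CPU time with wall-clock time. A ratio close to 1.0 suggests the process kept roughly one logical processor busy.

```python
import math
import os
import time


def busy_loop(n: int) -> float:
    """Hypothetical helper: same kind of work as the loop above, with a configurable size."""
    a = 0.0
    for x in range(n):
        a = math.cos(x)
    return a


if __name__ == "__main__":
    n = 10**7  # assumed smaller size so the check finishes quickly
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    busy_loop(n)
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start

    logical_cpus = os.cpu_count() or 1
    # CPU time / wall time is roughly how many logical processors this process kept busy
    cores_busy = cpu / wall
    print(f"logical CPUs: {logical_cpus}")
    print(f"wall time: {wall:.2f} s, CPU time: {cpu:.2f} s, cores busy: {cores_busy:.2f}")
    # Approximate share of the whole machine's capacity, as an overall CPU meter would show it
    print(f"approx. share of total CPU: {100 * cores_busy / logical_cpus:.0f}%")
```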

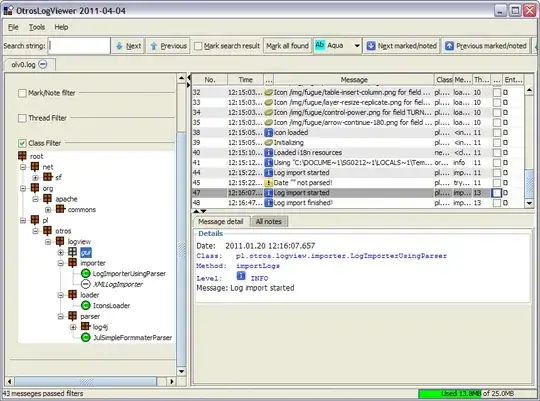

This is my CPU before running the code above:

And while running the code:

How can I make Python use 100% of the CPU in these critical sections?
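One commonly used way to spread a pure-Python CPU-bound loop over several cores is to split it across worker processes: in CPython, the global interpreter lock (GIL) allows only one thread per process to execute Python bytecode at a time, but each process has its own interpreter. The sketch below assumes the work can be divided into independent chunks, as it can for the `cos(x)` loop; `split_range` and `cos_chunk` are hypothetical helper names, and the actual speedup will depend on the number of physical cores and on process start-up overhead.

```python
from __future__ import annotations

import math
import os
import time
from concurrent.futures import ProcessPoolExecutor


def cos_chunk(bounds: tuple[int, int]) -> float:
    """Hypothetical helper: compute cos(x) for x in [start, stop) and return the last value."""
    start, stop = bounds
    a = 0.0
    for x in range(start, stop):
        a = math.cos(x)
    return a


def split_range(n: int, parts: int) -> list[tuple[int, int]]:
    """Hypothetical helper: split range(n) into `parts` contiguous, nearly equal chunks."""
    step, extra = divmod(n, parts)
    bounds = []
    start = 0
    for i in range(parts):
        # The first `extra` chunks get one additional element each
        stop = start + step + (1 if i < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


if __name__ == "__main__":
    n = 10**8
    workers = os.cpu_count() or 1
    ini = time.time()
    # Each chunk runs in its own process, so several cores can work at once
    with ProcessPoolExecutor(max_workers=workers) as pool:
        last_values = list(pool.map(cos_chunk, split_range(n, workers)))
    print("Total Time: ", time.time() - ini)
```

The `if __name__ == "__main__":` guard matters on Windows, where worker processes are started with the spawn method and re-import the main module.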