Hmm, why AI? I think it might be much more work to code and train an AI to do something like that than to write it on your own...

I usually use a different approach. If the stuff I need to code gets hideous, I simply write a small program that creates the source code for me. The same goes for data tables etc. For example, this was coded in C++ on a PC but runs on an AVR32:

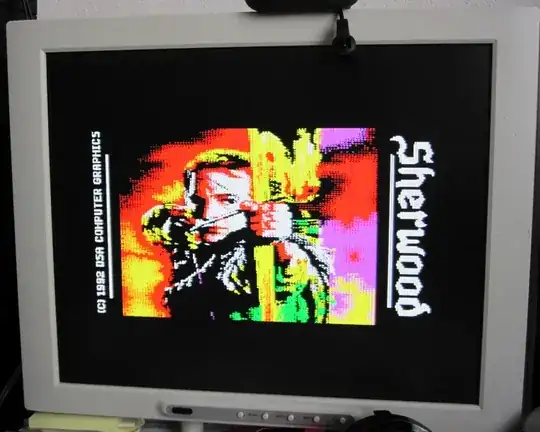

The MCU simply generates the VGA image signal using its SDRAM interface and DMA. The circuit consists of just the MCU, a few resistors, capacitors and diodes, a voltage regulator, and a crystal. The image is hard-coded as C++ source generated by the kind of program I mentioned above (some of you may recognize it as a loading screen from a ZX game).

Another example of this approach is the auto-generated code/templates I use for GLSL code running on the CPU (also coded on the CPU). Here is the vec2 template as an example:

    template <class T> class _vec2
    {
    public:
        T dat[2];
        _vec2(T _x,T _y) { x=_x; y=_y; }
        _vec2() { for (int i=0;i<2;i++) dat[i]=0; }
        _vec2(const _vec2& a) { *this=a; }
        ~_vec2() {}
        // 1D
        T get_x() { return dat[0]; } void set_x(T q) { dat[0]=q; }
        T get_y() { return dat[1]; } void set_y(T q) { dat[1]=q; }
        __declspec( property (get=get_x, put=set_x) ) T x;
        __declspec( property (get=get_y, put=set_y) ) T y;
        __declspec( property (get=get_x, put=set_x) ) T r;
        __declspec( property (get=get_y, put=set_y) ) T g;
        __declspec( property (get=get_x, put=set_x) ) T s;
        __declspec( property (get=get_y, put=set_y) ) T t;
        // 2D
        _vec2<T> get_xy() { return _vec2<T>(x,y); } void set_xy(_vec2<T> q) { x=q.x; y=q.y; }
        _vec2<T> get_yx() { return _vec2<T>(y,x); } void set_yx(_vec2<T> q) { y=q.x; x=q.y; }
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> xy;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> xg;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> xt;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> yx;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> yr;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> ys;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> ry;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> rg;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> rt;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> gx;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> gr;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> gs;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> sy;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> sg;
        __declspec( property (get=get_xy, put=set_xy) ) _vec2<T> st;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> tx;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> tr;
        __declspec( property (get=get_yx, put=set_yx) ) _vec2<T> ts;
        // operators
        _vec2* operator = (const _vec2 &a) { for (int i=0;i<2;i++) dat[i]=a.dat[i]; return this; } // =a
        T& operator [](const int i) { return dat[i]; } // a[i]
        _vec2<T> operator + () { return *this; } // +a
        _vec2<T> operator - () { _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]= -dat[i]; return q; } // -a
        _vec2<T> operator ++ () { for (int i=0;i<2;i++) dat[i]++; return *this; } // ++a
        _vec2<T> operator -- () { for (int i=0;i<2;i++) dat[i]--; return *this; } // --a
        _vec2<T> operator ++ (int) { _vec2<T> q=*this; for (int i=0;i<2;i++) dat[i]++; return q; } // a++
        _vec2<T> operator -- (int) { _vec2<T> q=*this; for (int i=0;i<2;i++) dat[i]--; return q; } // a--
        _vec2<T> operator + (_vec2<T>&v){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]= dat[i]+v.dat[i]; return q; } // a+b
        _vec2<T> operator - (_vec2<T>&v){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]= dat[i]-v.dat[i]; return q; } // a-b
        _vec2<T> operator * (_vec2<T>&v){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]= dat[i]*v.dat[i]; return q; } // a*b
        _vec2<T> operator / (_vec2<T>&v){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]=divide(dat[i],v.dat[i]); return q; } // a/b
        _vec2<T> operator + (const T &c){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]=dat[i]+c; return q; } // a+c
        _vec2<T> operator - (const T &c){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]=dat[i]-c; return q; } // a-c
        _vec2<T> operator * (const T &c){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]=dat[i]*c; return q; } // a*c
        _vec2<T> operator / (const T &c){ _vec2<T> q; for (int i=0;i<2;i++) q.dat[i]=divide(dat[i],c); return q; } // a/c
        _vec2<T> operator +=(_vec2<T>&v){ this[0]=this[0]+v; return *this; };
        _vec2<T> operator -=(_vec2<T>&v){ this[0]=this[0]-v; return *this; };
        _vec2<T> operator *=(_vec2<T>&v){ this[0]=this[0]*v; return *this; };
        _vec2<T> operator /=(_vec2<T>&v){ this[0]=this[0]/v; return *this; };
        _vec2<T> operator +=(const T &c){ this[0]=this[0]+c; return *this; };
        _vec2<T> operator -=(const T &c){ this[0]=this[0]-c; return *this; };
        _vec2<T> operator *=(const T &c){ this[0]=this[0]*c; return *this; };
        _vec2<T> operator /=(const T &c){ this[0]=this[0]/c; return *this; };
        // members
        int length() { return 2; } // dimensions
    };

As you can see, the getters/setters tend to be hideous to code by hand (this is just 2D; now imagine 4D). The full code for vec is around 228 KByte of source. Here is the function I generated it with:

    void _vec_generate(AnsiString &txt,const int n) // generate _vec(n)<T> get/set source code n>=2
    {
        int i,j,k,l;
        int i3,j3,k3,l3;
        const int n3=12;
        const char x[n3+1]="xyzwrgbastpq"; // 3 naming sets of 4 letters, +1 for the terminating '\0'
        txt+="template <class T> class _vec"+AnsiString(n)+"\r\n";
        txt+="\t{\r\n";
        for (;;) // single pass; break leaves as soon as the requested dimension is done
        {
            if (n<1) break;
            txt+="\t// 1D\r\n";
            for (i=0;i<n;i++) txt+=AnsiString().sprintf("\tT get_%c() { return dat[%i]; } void set_%c(T q) { dat[%i]=q; }\r\n",x[i],i,x[i],i);
            for (j=0;j<12;j+=4) for (i=0;i<n;i++) txt+=AnsiString().sprintf("\t__declspec( property (get=get_%c, put=set_%c) ) T %c;\r\n",x[i],x[i],x[i+j]);
            if (n<2) break;
            txt+="\t// 2D\r\n";
            for (i=0;i<n;i++)
             for (j=0;j<n;j++) if (i!=j)
            {
                txt+=AnsiString().sprintf("\t_vec2<T> get_%c%c() { return _vec2<T>(%c,%c); } ",x[i],x[j],x[i],x[j]);
                txt+=AnsiString().sprintf("void set_%c%c(_vec2<T> q) { %c=q.%c; %c=q.%c; }\r\n",x[i],x[j],x[i],x[0],x[j],x[1]);
            }
            // i3,j3,... walk all 12 letters; i,j,... are the component indexes behind them
            for (i=i3=0;i3<n3;i3++,i=i3&3) if (i<n)
             for (j=j3=0;j3<n3;j3++,j=j3&3) if (j<n) if (i!=j)
            {
                txt+=AnsiString().sprintf("\t__declspec( property (get=get_%c%c, put=set_%c%c) ) _vec2<T> %c%c;\r\n",x[i],x[j],x[i],x[j],x[i3],x[j3]);
            }
            if (n<3) break;
            txt+="\t// 3D\r\n";
            for (i=0;i<n;i++)
             for (j=0;j<n;j++) if (i!=j)
              for (k=0;k<n;k++) if ((i!=k)&&(j!=k))
            {
                txt+=AnsiString().sprintf("\t_vec3<T> get_%c%c%c() { return _vec3<T>(%c,%c,%c); } ",x[i],x[j],x[k],x[i],x[j],x[k]);
                txt+=AnsiString().sprintf("void set_%c%c%c(_vec3<T> q) { %c=q.%c; %c=q.%c; %c=q.%c; }\r\n",x[i],x[j],x[k],x[i],x[0],x[j],x[1],x[k],x[2]);
            }
            for (i=i3=0;i3<n3;i3++,i=i3&3) if (i<n)
             for (j=j3=0;j3<n3;j3++,j=j3&3) if (j<n) if (i!=j)
              for (k=k3=0;k3<n3;k3++,k=k3&3) if (k<n) if ((i!=k)&&(j!=k))
            {
                txt+=AnsiString().sprintf("\t__declspec( property (get=get_%c%c%c, put=set_%c%c%c) ) _vec3<T> %c%c%c;\r\n",x[i],x[j],x[k],x[i],x[j],x[k],x[i3],x[j3],x[k3]);
            }
            if (n<4) break;
            txt+="\t// 4D\r\n";
            for (i=0;i<n;i++)
             for (j=0;j<n;j++) if (i!=j)
              for (k=0;k<n;k++) if ((i!=k)&&(j!=k))
               for (l=0;l<n;l++) if ((i!=l)&&(j!=l)&&(k!=l))
            {
                txt+=AnsiString().sprintf("\t_vec4<T> get_%c%c%c%c() { return _vec4<T>(%c,%c,%c,%c); } ",x[i],x[j],x[k],x[l],x[i],x[j],x[k],x[l]);
                txt+=AnsiString().sprintf("void set_%c%c%c%c(_vec4<T> q) { %c=q.%c; %c=q.%c; %c=q.%c; %c=q.%c; }\r\n",x[i],x[j],x[k],x[l],x[i],x[0],x[j],x[1],x[k],x[2],x[l],x[3]);
            }
            for (i=i3=0;i3<n3;i3++,i=i3&3) if (i<n)
             for (j=j3=0;j3<n3;j3++,j=j3&3) if (j<n) if (i!=j)
              for (k=k3=0;k3<n3;k3++,k=k3&3) if (k<n) if ((i!=k)&&(j!=k))
               for (l=l3=0;l3<n3;l3++,l=l3&3) if (l<n) if ((i!=l)&&(j!=l)&&(k!=l))
            {
                txt+=AnsiString().sprintf("\t__declspec( property (get=get_%c%c%c%c, put=set_%c%c%c%c) ) _vec4<T> %c%c%c%c;\r\n",x[i],x[j],x[k],x[l],x[i],x[j],x[k],x[l],x[i3],x[j3],x[k3],x[l3]);
            }
            break;
        }
        txt+="\t};\r\n";
    }

Half of the core of my Z80 CPU emulator code is auto-generated this way and is fully configurable from a MySQL database dumped to a text file.

In the demo scene it was usual to compute some effects on a better computer, or in advance, and encode the result so it can be "played back" instead of being generated in real time on the target platform.

So as you can see, there are easier ways than AI to overcome a brick wall...