I've been shooting quite a few gigs at clubs and concerts, and I repeatedly run into problems with scenes lit by narrow-band light sources (for example, lasers).

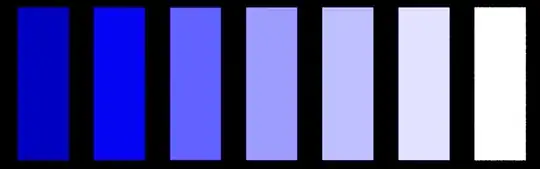

These lights cause a single color channel to saturate, which produces odd artifacts. In theory, I'd expect a very strong light to bleed into the other channels and eventually level off at white. Here's a simulation of what I expect, rendered with Filmic Blender:

Every rectangle emits RGB(0,0,1) with luminosity increasing from left to right.
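The expected "bleed to white" behavior can be sketched with a toy model. This is a hypothetical illustration, not how Filmic Blender actually works: `bleed_to_white` is an invented helper that clips each channel at 1.0 and spreads the excess evenly over the channels that still have headroom, so a pure blue ramp drifts toward white as intensity grows.

```python
# Hypothetical "bleed" model (an illustration, not Blender's Filmic transform):
# light that would push a channel past 1.0 spills evenly into the channels
# that still have headroom, so a very bright pure color drifts toward white.

def bleed_to_white(rgb):
    """Clip channels at 1.0 and share the excess among unclipped channels."""
    out = list(rgb)
    while True:
        # Total signal that does not fit into the [0, 1] range.
        excess = sum(max(c - 1.0, 0.0) for c in out)
        out = [min(c, 1.0) for c in out]
        # Channels that can still absorb some of the excess.
        open_channels = [i for i, c in enumerate(out) if c < 1.0]
        if excess <= 0.0 or not open_channels:
            return tuple(out)
        share = excess / len(open_channels)
        for i in open_channels:
            out[i] += share


# Pure blue, RGB(0, 0, 1), scaled by an increasing intensity (left to right).
for intensity in [0.5, 1.0, 2.0, 3.0, 4.0]:
    print(intensity, bleed_to_white((0.0, 0.0, intensity)))
```

With equal sharing, pure blue reaches full white at three times the clip level; that cutoff is an artifact of this sketch, and a filmic-style curve would approach white more gradually.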

Instead, the camera seems to simply clip the blue channel at its maximum value. If the color changes at all, it jumps abruptly from blue to white. Here's one example:

There are more examples on Imgur.
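What the camera appears to do can be modeled, very roughly, as clamping each channel independently once the sensor saturates. This is a simplified sketch under that assumption (real raw processing also involves demosaicing, white balance and a color matrix, all ignored here), and `clip_per_channel` is a hypothetical helper. It shows why the result stays pure blue: clamping is many-to-one, so every intensity at or above the clip point collapses onto the same value.

```python
# Simplified model (assumption): each channel is clamped to [0, 1] on its own,
# with no spill into the other channels.

def clip_per_channel(rgb):
    """Clamp each channel independently to the range [0, 1]."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


ramp = [0.5, 1.0, 2.0, 4.0, 8.0]
for intensity in ramp:
    print(intensity, clip_per_channel((0.0, 0.0, intensity)))

# All intensities at or above the clip point map to the same output,
# so the original brightness cannot be recovered from the clipped value.
clipped = {clip_per_channel((0.0, 0.0, i)) for i in ramp if i >= 1.0}
print(len(clipped))
```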

To avoid these problems, I usually coordinate with the organizers and the lighting crew to make sure there is always some tungsten lighting on stage. This requirement limits my freedom a lot, and I'd like to avoid it.

Is there some image processing that would help, for example mapping RGB(0,0,1) to white? Is this simply a hardware limitation of every camera I've used so far (various Canon DSLRs and the Sony a7 lineup)? The images were recorded in the Adobe RGB color space; could that be a limitation?
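One possible form of the remap asked about above is a highlight roll-off: blend a pixel toward white as its brightest channel approaches clipping, so RGB(0,0,1) becomes white while slightly dimmer blues are only partly desaturated. This is a minimal sketch, assuming linear RGB values normalized to [0, 1]; `desaturate_highlights` and its `knee` parameter are hypothetical, and 0.8 is an arbitrary starting point. It cannot restore detail lost to clipping, and it will also whiten bright colors that were legitimately saturated.

```python
def smoothstep(x):
    """Cubic ease from 0 to 1 on [0, 1], clamped outside that range."""
    x = min(max(x, 0.0), 1.0)
    return x * x * (3.0 - 2.0 * x)


def desaturate_highlights(rgb, knee=0.8):
    """Blend a pixel toward white as its brightest channel nears 1.0.

    knee: hypothetical threshold in (0, 1) where the roll-off begins.
    """
    peak = max(rgb)
    # 0 below the knee, rising smoothly to 1 when the peak channel is clipped.
    t = smoothstep((peak - knee) / (1.0 - knee))
    return tuple((1.0 - t) * c + t * 1.0 for c in rgb)


for blue in [0.5, 0.85, 0.95, 1.0]:
    print(blue, desaturate_highlights((0.0, 0.0, blue)))
```

Using a smooth ramp instead of a hard threshold avoids replacing the original blue-to-white jump with a new one at the knee.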