Digital imaging sensors in the vast majority of cameras used for creative photography do not measure color information. They measure a brightness value of the light that strikes each pixel.

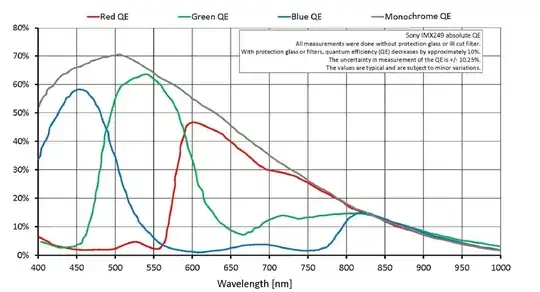

It is true that for a Bayer masked image sensor the pixels have a red, a green, or a blue filter in front of them. But each pixel still measures light of all wavelengths that make it through the filter and strike that pixel. Each pixel counts every photon that strikes it, regardless of wavelength.

The green filter allows more light in wavelengths centered on green to pass through than it allows light in wavelengths closer to blue or red to pass through. But a good bit of red and some blue light will make it through the green filter and be included in the total brightness value counted by that "green" pixel.

The red filter allows more light in wavelengths centered on red to pass through than it allows light closer to green or blue wavelengths to pass through. But a good bit of green and a little bit of blue light will make it through the red filter and be included in the total brightness value counted by that "red" pixel.

The blue filter allows more light in wavelengths centered on blue to pass through than it allows light closer to green or red wavelengths to pass through. But a good bit of green and even a little bit of red light will make it through and be included in the total brightness value counted by that "blue" pixel.
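To make the overlap concrete, here is a minimal sketch of a filtered pixel that simply adds up whatever light gets through its filter. The filter curves are invented for illustration (a Gaussian passband plus a small leakage floor); the center wavelengths, width, and leakage are assumptions, not measurements of any real sensor.

```python
import math

# Hypothetical filter curves: center wavelength (nm) of each passband.
# These values, WIDTH_NM, and LEAK are illustrative assumptions only.
FILTER_CENTERS_NM = {"red": 600.0, "green": 540.0, "blue": 460.0}
WIDTH_NM = 50.0   # assumed passband width
LEAK = 0.05       # assumed fraction that passes at every wavelength


def transmission(filter_name, wavelength_nm):
    """Fraction of light at this wavelength that passes the named filter."""
    center = FILTER_CENTERS_NM[filter_name]
    passband = math.exp(-((wavelength_nm - center) ** 2) / (2 * WIDTH_NM ** 2))
    return LEAK + (1 - LEAK) * passband


def sensel_value(filter_name, spectrum):
    """Total brightness counted by one pixel.

    spectrum maps wavelength (nm) to photon count. The pixel does not record
    which wavelength a photon had; it only adds up what made it through.
    """
    return sum(count * transmission(filter_name, wl)
               for wl, count in spectrum.items())


# Light of a single "green" wavelength still registers under all three filters.
green_light = {540.0: 1000.0}
readings = {name: sensel_value(name, green_light) for name in FILTER_CENTERS_NM}
```

With these made-up curves the "green" pixel reports the largest value, but the "red" and "blue" pixels report nonzero values for the same light, which is the behavior described above.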

Color response curves of a typical CMOS image sensor:

One way to understand this is to remember what happens when we put a red filter in front of the lens when using black & white film. The resulting image is still black and white, but the colors in the scene that are closer to red are as bright a shade of grey in an image with a red filter as they are in an image taken without the red filter. Not so with colors in the scene that are closer to green or blue. The green items are a darker shade of grey than they were in the unfiltered image and the blue items are a LOT darker shade of grey than they were in the unfiltered image.

But the green and blue items are still visible in the red filtered image at reduced brightness values.

That is how the filters over each pixel on a Bayer masked sensor work - some of all wavelengths of light pass through each one. Only when the amounts of light allowed through for adjacent pixels filtered for red, green, and blue are compared can color information be derived from the information collected by the monochromatic sensor. This is the process we refer to as demosaicing or as debayerization.
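As a rough illustration of comparing adjacent pixels, the sketch below fills in all three channels for every pixel by averaging the nearby sensels of each filter color. It assumes an RGGB layout and a mosaic of at least 2x2 pixels, and it is a deliberately simplified neighborhood average, not the algorithm used by any particular camera or raw converter.

```python
def bayer_color(row, col):
    """Filter color at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(mosaic):
    """Turn a grid of single brightness values into a grid of (R, G, B) tuples.

    For each pixel, each channel is the average of the sensels of that filter
    color within the surrounding 3x3 neighborhood (clipped at the edges).
    Assumes the mosaic is at least 2x2 so every neighborhood has all colors.
    """
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    color = bayer_color(rr, cc)
                    sums[color] += mosaic[rr][cc]
                    counts[color] += 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        image.append(out_row)
    return image


# A uniformly colored scene: every sensel holds only one brightness value,
# and which value it holds depends on the filter in front of it.
levels = {"R": 200, "G": 100, "B": 50}
mosaic = [[levels[bayer_color(r, c)] for c in range(4)] for r in range(4)]
rgb = demosaic(mosaic)
```

No single entry in `mosaic` says anything about color; the color only appears once neighboring values behind different filters are combined.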

The reason this works so well is that a Bayer mask emulates the way the human retina works: we have three types of cones in our retinas, each most sensitive to a different range of wavelengths. But each type of cone is sensitive, to one degree or another, to wavelengths that overlap those of the other types. Only when our brain compares the differences in response between the sets of cones does it create the colors that we perceive.

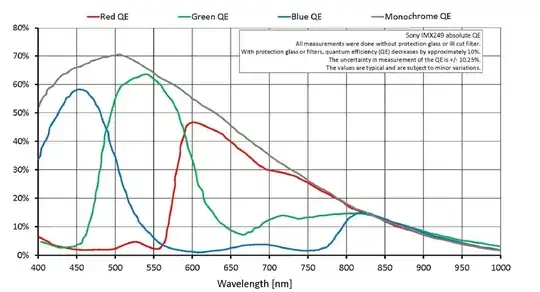

Color response of the three types of cones in the human retina:

Notice how closely the responses of our "red" and "green" cones overlap! But the difference is enough for our brains to differentiate various colors based on the comparative difference between the signals they receive from each set of cones.

> To control color balance in digital camera exposures it would potentially be useful to be able to change the sensitivity of the sensor on a per channel basis, or alternatively to be able to apply an "exposure compensation" on a per channel basis (where Red, Green, and Blue are the three channels). Is there any camera that supports this ability?

Pretty much every color camera with a Bayer mask (or the external application used to decode the raw data later outside the camera) applies exposure compensation on a per channel basis. That is what we call "White Balance." But cameras intended for creative photography, which is the application with which this group is concerned, all do it after the signal from the sensor is converted to digital information.

Why? Because applying a different analog gain to each set of pixels before analog-to-digital conversion would reduce the dynamic range and noise performance of each set by a different amount. The only way to shift the balance before conversion without that penalty would be to alter the exact colors of the filters in the Bayer mask. If we instead do it electronically to the analog voltages output by each sensel (pixel well), we introduce a different amount of 'dark current' noise into the values from the sensels behind each filter color, and we reduce the dynamic range of the sensor in a way that could lead to banding in areas of color gradation within the image. By amplifying the signal from each sensel equally (remember, at this point each sensel only has a specific brightness value with no color information whatsoever), we are able to exploit the maximum dynamic range and minimize the read noise created by every pixel on the sensor for a specific ISO setting.

The disadvantages of per channel analog amplification may be acceptable for other forms of imaging, such as machine vision and other technical applications. For creative photography, they seem to outweigh the benefits.
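For what it's worth, the per channel compensation that white balance applies to the digital values can be sketched in a few lines. The function names and the grey card numbers here are made up for illustration, and real raw converters do considerably more than this (clipping, color matrices, and so on).

```python
def gains_from_neutral(neutral_pixel):
    """Per channel multipliers that make a known-neutral (R, G, B) reading grey.

    Green is used as the reference channel, which is an assumption of this
    sketch rather than a requirement.
    """
    r, g, b = neutral_pixel
    return (g / r, 1.0, g / b)


def white_balance(pixel, gains):
    """Apply a separate 'exposure compensation' multiplier to each channel."""
    return tuple(value * gain for value, gain in zip(pixel, gains))


# Hypothetical demosaiced reading of a grey card under warm-ish light.
grey_card = (80.0, 100.0, 50.0)
gains = gains_from_neutral(grey_card)
balanced = white_balance(grey_card, gains)
```

Because the multipliers act on numbers that have already been digitized, every sensel was amplified identically on the analog side and the balance can be changed later without touching the capture itself.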