Is there a tool that can help you do such a thing? Can Photoshop do it? MATLAB can, but I don't want to use it.

-

Why do you think the frequency spectrum reflects the quality of the image? The amplitude histogram is often used as an indication of a well-exposed image, but that's a completely different thing. – Philip Kendall Mar 28 '16 at 20:30

-

See also / possible duplicate: What parameters can I use to evaluate a “Perceptual Image Quality”? – Philip Kendall Mar 28 '16 at 20:32

-

The frequency spectrum could be used as a measure of sharpness. This isn't generally a measure of quality, as a perfectly sharp (all over) image is only desirable in certain situations. However, for very similar images it may be a valid comparison (e.g. if you're at the limits of what you can hold steady and hold the trigger down). – Chris H Mar 28 '16 at 20:49

-

What exactly does Matlab do that you want to replicate? – mattdm Mar 28 '16 at 21:15

-

Please be a little more specific about what you define as "quality." – Michael C Mar 29 '16 at 01:04

-

Compress both with fixed quality JPEG (like, quality 85%) and then compare the size of the files. Bigger = better – FarO Mar 29 '16 at 08:36

-

Thanks for everyone's comments. I didn't know how to ask this question correctly. Now I know. By quality I just meant sharpness. And Chris helped explain the reason. Thanks! : ) – Ethan Mar 29 '16 at 14:01

4 Answers

I assume you have two versions of the same image as files, you have no highest-quality reference image, and you want to keep the better of the two.

Based on the previous answer, I suggest a brute-force approach that is quick to get working:

- Resize both images to the size of the larger one, convert them to grayscale, and re-compress them with exactly the same quality preset, using JPEG or a better algorithm.

- Compare the file sizes.

- Delete the temporary files and keep the better picture (the one corresponding to the bigger file).

Explanation: The files were often saved with different compression presets, so their original sizes say nothing about quality. That is why we re-compress them with the same preset and software. We resize them to the same dimensions so that the compressor's decisions depend only on the level of detail in the two images. The image with more pixels is not always the one with more detail.

When this will fail: if noise has been added to one of the images. Some photographers add noise when retouching their photos to increase their "ART" value. Noise is hard to compress and the JPEG encoder treats it as a higher level of detail, so it yields a bigger file.

How to do this in Debian/Ubuntu Linux (I'm using it):

Install ImageMagick if you don't have it:

    sudo apt-get install imagemagick

Source code of my bash script, called better_img.bash:

    #!/bin/bash
    # Usage: better_img.bash IMAGE1 IMAGE2
    img1=$1
    img2=$2

    W1=$(identify -format "%w" "$img1")  # width of image 1
    H1=$(identify -format "%h" "$img1")  # height of image 1
    W2=$(identify -format "%w" "$img2")  # width of image 2
    H2=$(identify -format "%h" "$img2")  # height of image 2

    # Use the dimensions of the wider image as the common target size.
    if [ "$W1" -ge "$W2" ]; then W=$W1; H=$H1; else W=$W2; H=$H2; fi
    TD="${W}x${H}"

    # Re-compress both as grayscale JPEGs with identical settings.
    convert "$img1" -colorspace Gray -resize "$TD" -quality 75 /tmp/cmp_out1.jpg
    convert "$img2" -colorspace Gray -resize "$TD" -quality 75 /tmp/cmp_out2.jpg

    S1=$(wc -c < /tmp/cmp_out1.jpg)  # file size in bytes
    S2=$(wc -c < /tmp/cmp_out2.jpg)  # file size in bytes

    # Print the name of the image that produced the bigger file.
    if [ "$S1" -ge "$S2" ]; then echo "$img1"; else echo "$img2"; fi

    rm /tmp/cmp_out1.jpg /tmp/cmp_out2.jpg

It takes the two file names as parameters and prints the name of the better file.

> it will show the frequency spectrum? of the picture which tells you its quality.

Sounds like you're thinking of the histogram, which shows the distribution of pixels according to either their brightness or their red, green, and blue values. It's not a measure of quality beyond the fact that it can help you evaluate exposure. But you can easily take a photo that has a nicely distributed range of values, but is completely out of focus, or badly composed, or just ugly.

> Is there a tool that can help you do such thing?

If you just want the histogram, there are lots of tools that'll do that. Most photo management tools (Lightroom, Aperture, Photos, etc.) include a histogram feature.

One really easy way to get a histogram is to install the ImageMagick tools and use the convert command, like:

convert somefilename.jpg histogram:histogramFileName.gif

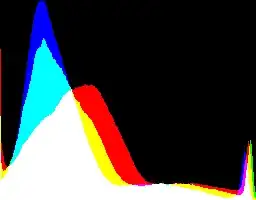

And then you get something like this:

Like everything related to ImageMagick, there are lots of options to customize the output and get exactly what you want. For example, you can get the pixel counts for every unique color in the image. Or you can separate the image into red, blue, and green components and generate separate brightness histograms for each.
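As a rough sketch of those two options, assuming ImageMagick's `convert` is on the PATH: the function names `color_counts` and `channel_histograms` and the output file naming are made up for illustration, not part of ImageMagick.

```shell
#!/bin/bash
# Sketch only: function names and output file names are illustrative.

# color_counts IMAGE
# Print one line per unique color in IMAGE, prefixed by its pixel count.
color_counts() {
    convert "$1" -format %c histogram:info:-
}

# channel_histograms IMAGE PREFIX
# Write one brightness histogram per color channel:
# PREFIX_R.gif, PREFIX_G.gif and PREFIX_B.gif.
channel_histograms() {
    for ch in R G B; do
        # Extract a single channel as a grayscale image, then plot it.
        convert "$1" -channel "$ch" -separate histogram:"${2}_${ch}.gif"
    done
}
```

For example, `color_counts somefilename.jpg | sort -rn | head` would list the most frequent colors first, and `channel_histograms somefilename.jpg hist` would leave three GIF files next to each other for comparison.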

Your question is very confusing, but I have used a method that analyzes a histogram, although for comparing the loss from different compression settings.

Use Google Translate, please: http://otake.com.mx/Apuntes/PruebasDeCompresion/1-CompresionJpgProceso.htm

The basic idea is that you overlay the same image, saved with different compression settings, on the original image using a difference blend mode.

After that, you amplify the resulting image and compare the histograms.
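The same idea can be approximated on the command line. This is only a sketch, assuming ImageMagick is installed and both files have the same pixel dimensions. The function name `diff_histogram`, the output names, and the default gain of 8 are my own choices for illustration and are not taken from the linked page.

```shell
#!/bin/bash
# Sketch only: diff_histogram and its output names are illustrative.

# diff_histogram ORIGINAL COMPRESSED PREFIX [GAIN]
# Writes PREFIX_diff.png (amplified difference) and PREFIX_hist.gif.
diff_histogram() {
    gain=${4:-8}   # assumed amplification factor, same for every comparison
    # Per-pixel absolute difference, multiplied by a fixed gain so that
    # small compression errors become visible.
    convert "$1" "$2" -compose difference -composite \
        -evaluate multiply "$gain" "${3}_diff.png"
    # Histogram of the amplified difference image.
    convert "${3}_diff.png" histogram:"${3}_hist.gif"
}
```

Running it once per compression setting, for example `diff_histogram original.png q85.jpg q85` and `diff_histogram original.png q60.jpg q60`, gives histograms that can be compared side by side. A fixed gain is used instead of automatic level stretching so that the results stay comparable between runs.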

-

I am interested but the link is broken. Can you name the algorithm or point to other documentation page? – IceCold Aug 03 '19 at 16:10

-

https://web.archive.org/web/20160409224503/http://otake.com.mx/Apuntes/PruebasDeCompresion2/1-CompresionJpgProceso.htm – IceCold Aug 03 '19 at 16:21

-

Thanks. I updated the link. I do not know why I broke it in the first place. – Rafael Aug 03 '19 at 16:35

A really lazy heuristic, assuming that the images have been taken with the same process and similar framing and are available as JPEG, is to take the image with the larger file size. Basically this means that the lossy JPEG compression algorithm was of the opinion that more human-discernible details required preserving which usually means more in-focus details. Of course, whether those details are the ones of your actually interesting subject is another question, but teaching "interesting subject" to an algorithm would likely be hopeless anyway.
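A minimal sketch of that heuristic, using only standard shell tools. The function name `larger_file` is made up for illustration, and a tie is arbitrarily resolved in favor of the first file.

```shell
#!/bin/bash
# Sketch only: larger_file is an illustrative helper name.

# larger_file A B
# Print the name of whichever file has more bytes (A on a tie).
larger_file() {
    size_a=$(wc -c < "$1")
    size_b=$(wc -c < "$2")
    if [ "$size_a" -ge "$size_b" ]; then
        printf '%s\n' "$1"
    else
        printf '%s\n' "$2"
    fi
}
```

Calling `larger_file shot1.jpg shot2.jpg` prints the name of the file to keep. It is only meaningful under the assumptions stated above: same camera settings, similar framing, and the same JPEG encoder and quality.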