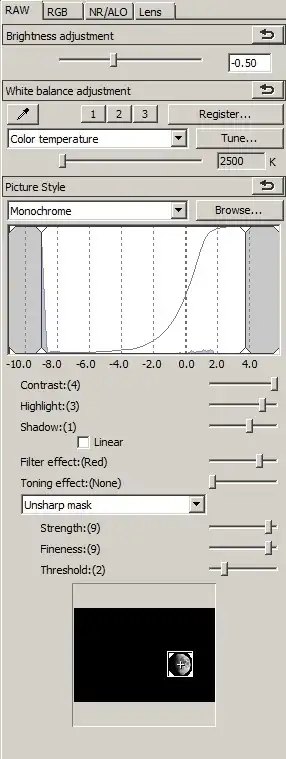

You need a demosaicing algorithm even if you convert the image to B&W.

The reason is quite simple: otherwise you'd get sub-pixel artifacts all over the place. You need to realize that the image recorded by the sensor is quite messy. Let's take a look at the sample from Wikipedia:

Now imagine we don't do any demosaicing and just convert the RAW data into grayscale:

Well... you see the black holes? The red-filtered pixels didn't register anything in the background.

Now, let's compare that with the demosaiced image converted to grayscale (on the left):

You basically lose detail, but you also lose a lot of the artifacts that make the image rather unbearable. The image that bypasses demosaicing also loses a lot of contrast because of how the B&W conversion is performed. Finally, shades that fall in between the primary colours might be represented in rather unexpected ways, while large red or blue surfaces will be three-quarters blank, since in a Bayer array only one photosite in four sits behind a red (or blue) filter.
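To make the "three-quarters blank" point concrete, here is a minimal sketch that samples a uniform red surface through a colour filter array and then reads the raw values as if they were gray levels. It assumes an RGGB Bayer layout and an idealized sensor with no crosstalk or noise; the names `BAYER_RGGB` and `mosaic` are made up for illustration and do not come from any RAW library.

```python
# Assumed layout: RGGB Bayer pattern, repeated every 2x2 photosites.
BAYER_RGGB = (("R", "G"), ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def mosaic(rgb_image):
    """Sample an RGB image through the colour filter array.

    Each photosite keeps only the channel its filter lets through,
    which is roughly what the RAW data holds before demosaicing.
    """
    raw = []
    for y, row in enumerate(rgb_image):
        raw_row = []
        for x, pixel in enumerate(row):
            colour = BAYER_RGGB[y % 2][x % 2]
            raw_row.append(pixel[CHANNEL_INDEX[colour]])
        raw.append(raw_row)
    return raw


# A 4x4 patch of uniform, saturated red (idealized: no green or blue at all).
red_patch = [[(200, 0, 0)] * 4 for _ in range(4)]

# Treating the raw samples directly as gray levels, with no demosaicing.
raw = mosaic(red_patch)
blank_fraction = sum(value == 0 for row in raw for value in row) / 16

for row in raw:
    print(row)
print("fraction of black pixels:", blank_fraction)
```

Only the photosites behind a red filter record anything, so a surface that should be one even tone turns into a grid of bright dots on black. A real sensor has some overlap between the filter responses, so the holes would be dark rather than perfectly black, but the pattern is the same.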

I know that this is a simplification, and you might aim to create an algorithm that is simply more efficient at converting RAW to B&W, but my point is this:

You need a computed colour image to generate correct shades of gray in a B&W photograph.
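As a rough illustration of why the colour has to be computed first, the sketch below rebuilds RGB from raw mosaic data and only then mixes the channels into gray. Two things here are assumptions rather than what any particular converter does: the "demosaic" is the crudest one possible (each RGGB 2x2 cell collapsed into one pixel, which halves the resolution), and the gray mix uses the Rec. 601 luma weights, which is just one common choice. The function names are hypothetical.

```python
def demosaic_2x2(raw):
    """Crude stand-in for demosaicing: one RGB pixel per RGGB 2x2 cell."""
    rgb = []
    for y in range(0, len(raw), 2):
        row = []
        for x in range(0, len(raw[0]), 2):
            r = raw[y][x]                            # top-left: red site
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2  # two green sites
            b = raw[y + 1][x + 1]                    # bottom-right: blue site
            row.append((r, g, b))
        rgb.append(row)
    return rgb


def to_gray(rgb):
    """Weighted channel mix (Rec. 601 luma weights, assumed here)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb]


# Raw RGGB samples of three uniform surfaces with the same raw signal level.
raw_red = [[200, 0, 200, 0], [0, 0, 0, 0]] * 2
raw_green = [[0, 200, 0, 200], [200, 0, 200, 0]] * 2
raw_blue = [[0, 0, 0, 0], [0, 200, 0, 200]] * 2

gray_red = to_gray(demosaic_2x2(raw_red))
gray_green = to_gray(demosaic_2x2(raw_green))
gray_blue = to_gray(demosaic_2x2(raw_blue))

print(gray_red, gray_green, gray_blue)
```

Each surface comes out as one even tone, and the three tones differ from each other the way you'd expect red, green and blue to differ in a B&W print. Reading the raw values directly gives neither: every surface is a dot pattern of the same two levels. Because the weights are applied to a full colour image, they can also be changed afterwards (a digital "red filter", for example), which isn't possible without the colour information.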

The good way to do B&W photography is to remove the colour filter array completely, as Leica did in the Monochrom, not to change the RAW conversion. Otherwise you get artifacts, false shades of gray, a drop in resolution, or all of these.

Add to this the fact that the RAW -> demosaiced colour -> B&W conversion gives you far more options to enhance and edit the image, and you've got a pretty much excellent solution that can only be beaten by a dedicated sensor construction. That's why you don't see dedicated B&W RAW converters that don't fall back on demosaicing somewhere in the process.