The starting point of this question is a hyperspectral camera on a satellite, where one is interested in macro-level details such as crop health, water pollution, etc. Is it better to go for a lower GSD or a higher GSD?

There are, of course, many factors in such a design, so my question is simply: which is better, a bigger pixel or smaller pixels, when one only needs the GSD of the bigger pixel and all other factors are equal?

My claim is that data averaged over smaller pixels will be worse (noisier) than data from one bigger pixel; my reasoning is below. Am I correct, or should I consider other factors too?

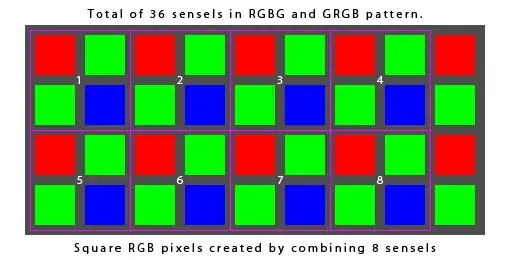

For simplicity, assume one CCD with a pixel of width $a$, and another CCD with $N$ pixels, each of width $a/N$.

The quantity of interest is the average value of the light over the width $a$. Is it better to collect the light with $N$ smaller pixels and then average their values, or to have one large pixel that gives the average directly?
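As a sanity check on this setup: without noise, both schemes measure exactly the same quantity, so any difference between them can only come from noise. Writing $I(x)$ for the light intensity along the strip (a symbol introduced here only for illustration), the big pixel gives the first line below, small pixel $k$ gives the second, and averaging the $N$ small-pixel values recovers the first:

$$
\begin{aligned}
\bar I_{\text{big}} &= \frac{1}{a}\int_0^a I(x)\,dx,\\
\bar I_k &= \frac{N}{a}\int_{(k-1)a/N}^{ka/N} I(x)\,dx, \qquad k = 1,\dots,N,\\
\frac{1}{N}\sum_{k=1}^{N} \bar I_k &= \frac{1}{N}\cdot\frac{N}{a}\int_0^a I(x)\,dx = \bar I_{\text{big}}.
\end{aligned}
$$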

My Analysis:

- Let the energy received over width $a$ be $E$.
- The energy received per smaller pixel is then $E/N$.
- I am not sure how a CCD works, but assuming it has something to do with a capacitor, I assume that the value assigned to a pixel is proportional to the voltage, and that the voltage is proportional to $\sqrt{\text{energy received}}$.
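Under the assumptions in this list, the signal voltages of the two pixel sizes compare as follows, where $k$ is a hypothetical proportionality constant introduced only for this sketch:

$$
\begin{aligned}
V_{\text{big}} &= k\sqrt{E},\\
V_{\text{small}} &= k\sqrt{E/N} = \frac{V_{\text{big}}}{\sqrt{N}}.
\end{aligned}
$$

So, under this model, each small pixel's signal voltage is smaller than the big pixel's by a factor of $\sqrt{N}$.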

Assume Gaussian noise with variance $s$ in the measured quantity, i.e. the voltage. Since the energy is distributed across $N$ pixels, the "resolution" per unit voltage is lower in each smaller pixel, which gives a noise variance of $N^2 s$ in the value assigned to each pixel; averaging over $N$ samples then reduces this to $N s$.
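A minimal Monte Carlo sketch of the variance arithmetic in this step. It takes the per-pixel noise variance $N^2 s$ as given (it does not test whether that assumption is physically right) and only checks that averaging $N$ independent such values leaves a variance of $N s$, compared with $s$ for a single big pixel. The function names are hypothetical, and the noiseless signal is omitted because a constant offset does not change the variance.

```python
import math
import random
import statistics


def variance_of_small_pixel_average(n: int, s: float, trials: int = 50_000, seed: int = 0) -> float:
    """Empirical variance of the average of n small-pixel values.

    Assumes, as in the text, that each small-pixel value (on the big-pixel
    scale) carries independent Gaussian noise of variance n**2 * s.
    """
    rng = random.Random(seed)
    sigma_small = math.sqrt(n**2 * s)  # per-pixel noise standard deviation
    averages = []
    for _ in range(trials):
        # One observation: read n small pixels, then average them.
        samples = [rng.gauss(0.0, sigma_small) for _ in range(n)]
        averages.append(sum(samples) / n)
    return statistics.pvariance(averages)


def variance_of_big_pixel(s: float, trials: int = 50_000, seed: int = 1) -> float:
    """Empirical variance of a single big-pixel value with noise variance s."""
    rng = random.Random(seed)
    readings = [rng.gauss(0.0, math.sqrt(s)) for _ in range(trials)]
    return statistics.pvariance(readings)


if __name__ == "__main__":
    n, s = 16, 1.0
    print(f"big pixel:        {variance_of_big_pixel(s):.3f}  (theory: {s})")
    print(f"avg of {n} small: {variance_of_small_pixel_average(n, s):.3f}  (theory: {n * s})")
```

This is just the standard result that the mean of $N$ independent samples with variance $\sigma^2$ has variance $\sigma^2/N$, applied with $\sigma^2 = N^2 s$.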

Thus, the noise of the average over the smaller pixels is higher than that of the value obtained directly from a bigger pixel. Of course, I am ignoring constant factors here and working only with proportionalities. I guess there will indeed be a break-even point once other engineering factors are considered.

But if I instead assume the noise is in the energy collected, the noise variance comes out the same in the end.
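One noise model under which this holds is photon shot noise: if photon counts are Poisson, the sum of the $N$ small-pixel counts is itself Poisson with the same mean as the big pixel's count, so the two have identical variance. This is an assumption about what "noise in the energy collected" means, not necessarily the model intended above, and read noise is deliberately left out. The sketch compares a single big-pixel count with the summed small-pixel counts (equivalently, the average small-pixel value rescaled to the big-pixel scale by multiplying by $N$). Python's `random` module has no Poisson sampler, so a simple Knuth-style one is written out; `poisson` and `compare_shot_noise` are hypothetical helper names.

```python
import math
import random
import statistics


def poisson(rng: random.Random, lam: float) -> int:
    """Draw a Poisson sample using Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k = 0
    product = rng.random()
    # Keep multiplying uniforms until the product drops to exp(-lam) or below.
    while product > threshold:
        k += 1
        product *= rng.random()
    return k


def compare_shot_noise(mean_photons: float, n: int, trials: int = 20_000, seed: int = 0):
    """Return (variance of one big pixel, variance of the sum of n small pixels).

    Assumes Poisson photon counts: the big pixel sees mean_photons on average,
    and each small pixel sees mean_photons / n.
    """
    rng = random.Random(seed)
    big = [poisson(rng, mean_photons) for _ in range(trials)]
    small_sum = [sum(poisson(rng, mean_photons / n) for _ in range(n)) for _ in range(trials)]
    return statistics.pvariance(big), statistics.pvariance(small_sum)


if __name__ == "__main__":
    var_big, var_small = compare_shot_noise(mean_photons=100.0, n=10)
    print(f"big pixel variance:        {var_big:.1f}")
    print(f"sum of small px variance:  {var_small:.1f}")
```

With `mean_photons=100` and `n=10`, both returned variances should come out close to 100, the Poisson variance of the total count.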