What are some examples of mathematical conjectures that applied mathematicians assume to be true in applications, despite it being unknown whether or not they are true?

-

1What precisely do you mean by "being unknown whether or not they are true"? – Somos Jan 18 '18 at 22:58

-

4@Somos As in, we do not have a valid proof or disproof of the conjecture. – Christopher King Jan 18 '18 at 22:59

-

22Do physicists qualify as "applied mathematicians"? There are hundreds of unproved conjectures that physicists believe. – Alexandre Eremenko Jan 19 '18 at 03:34

-

@AlexandreEremenko Eh, probably close enough, as you don't make hundreds of answers. – Christopher King Jan 19 '18 at 03:37

-

1This is tangential to the question, but perhaps might be of interest: "When were mathematical conjectures first formulated and communicated?." – Joseph O'Rourke Jan 22 '18 at 00:37

9 Answers

An important specific conjecture is that you cannot factor large integers fast. Many security systems for Internet and other transactions, depend on this.

- 88,753

-

8Afaik, all (public key) cryptography algorithms boil down to some number theoretic conjecture. Not necessarily factoring large numbers, but something has to be assumed to be difficult. – Dustan Levenstein Jan 18 '18 at 23:36

-

4I'm not an expert, but I think this is not true for Merkel hash-based schemes: "the European Commission has recommended use of Merkle signature scheme for long term security protection against quantum computers." – Joseph O'Rourke Jan 19 '18 at 00:11

-

15

-

5Isn't a Merkle tree just as secure as its underlying hash function? – darij grinberg Jan 19 '18 at 01:05

-

How likely is it that this would be proven false, but those systems still being secure? – Christopher King Jan 19 '18 at 03:44

-

-

2Even if P!=NP, factoring isn't known to be NP-hard, so crypto based on it could still be vulnerable even if we can't tackle NP problems in polynomial time. – Gray Taylor Jan 20 '18 at 22:51

-

@JosephO'Rourke: The application where factoring difficulty matters is not related to hashing. It is related to mainly two things: 1. secretly passing encryption keys without a listener being able to know what either party is talking about (Diffie-Hellman key exchange) and 2. asymmetric encryption where you can expose one of your encryption keys to the public (the public key) yet nobody can crack the encrypted message without your secret private key – slebetman Jan 21 '18 at 16:13

-

2@PyRulez an efficient algorithm for factoring gives an (essentially) equally efficient algorithm for breaking RSA and Diffie Hellman key exchange. The only technicality that can salvage these systems is that the efficient algorithm may be efficient only in theory, but in practice be very slow for all inputs of less than astronomical size. – Sasho Nikolov Jan 21 '18 at 20:00

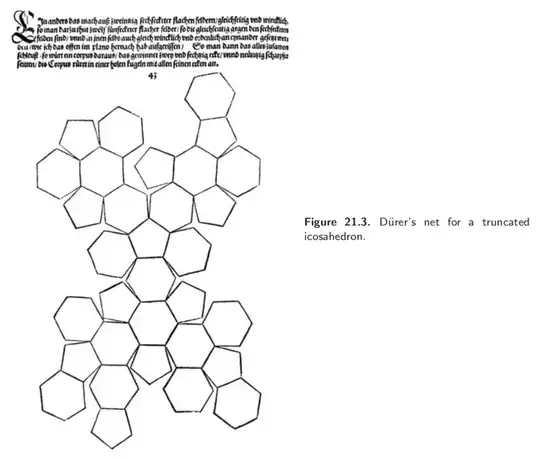

It is an open problem to resolve a question formalized by G. Shephard in 1975:

Q. Can the surface of every convex polyhedron be cut along edges and unfolded flat to one non-self-overlapping polygon in the plane?

It is usually called Dürer's problem because there is a sense in which it goes back to Dürer. But I am not sure it is fair to call it a "conjecture," one reason this is not an ideal answer to the posed query.

It is also a bit of a stretch to claim there is a direct application. But I did find a Ph.D. thesis in mechanical engineering that lamented, "there is no theorem or efficient algorithm that can tell if a given 3D shape is unfoldable [without overlap] or not." Despite Dürer's problem being unresolved, any engineer or architect who wants to unfold a 3D shape—say, to punch it out of aluminum—breaks it into convex pieces (one piece if already convex), and easily edge-unfolds the pieces without overlap. The lack of a resolution to the open problem is no impediment to actually finding edge-unfoldings in practice.

Fig. from Geometric Folding Algorithms, p.299: Soccer ball unfolding.

J.O'Rourke, "Dürer's problem." In Marjorie Senechal, editor, Shaping Space: Exploring Polyhedra in Nature, Art, and the Geometrical Imagination, pages 77--86. Springer, 2013. (Springer link.)

- 149,182

- 34

- 342

- 933

-

@Somos: You are quoting PyRulez, not me. I think what s/he means is that it is unknown whether or not the conjecture is true or false, or the open problem is resolved positively or negatively. The latter is the situation for Dürer's problem. – Joseph O'Rourke Jan 18 '18 at 22:55

-

2

-

26

-

Some partial results are in this paper: http://erikdemaine.org/papers/Ununfoldable/paper.pdf – Timothy Chow Jan 19 '18 at 16:59

-

6The paper Timothy cites proves that a particular non-convex polyhedron (a "spikey" tetrahedron) has no edge-unfolding that leads to a non-overlapping polygon in the plane. So it establishes that if the conjecture is true, it requires convexity. – Joseph O'Rourke Jan 22 '18 at 00:30

The use of RSA for public-key encryption is widely believed to rely on the assumption that factoring is hard. Actually it relies on a stronger assumption than this, namely that the RSA problem is hard.

The RSA problem is the following: given a semiprime $N = pq$ and an exponent $e$ such that $\gcd(e, \varphi(N)) = 1$, efficiently compute $e^{th}$ roots $\bmod N$. It is widely believed that the only way to do this is to compute $e^{-1} \bmod \varphi(N)$ where $\varphi(N) = N - p - q + 1$, and in turn it is widely believed that the only way to do this is to factor $N$ so as to compute $\varphi(N)$. However, strictly speaking both of these are conjectures which are independent of the conjecture that factoring is hard.

So it may be that factoring is hard but that the RSA problem is easy because there is some clever way to avoid these steps and solve the RSA problem without factoring $N$. Note also that we only need to factor semiprimes; it may also be that factoring is hard but that factoring semiprimes is easy.

- 114,941

-

6The math in RSA works not just for products of two distinct primes, but for any product of distinct primes (a single prime would lead to a really silly cryptosystem). So if factoring integers that are products of two primes winds up being easy without making factoring of squarefree integers with $k$ prime factors for some $k > 2$ also easy, then RSA could be saved by using $k$ primes instead of 2 primes. Admittedly, all known guidelines for avoiding easily cracked public keys in RSA would need to be adapted to products of $k$ primes. Someone should start working on that so we're ready. – KConrad Jan 19 '18 at 01:23

-

-

I know I'm being pedantic again but RSA is not "widely believed" to rely on this assumption, it is trivially proved to be broken if you have a fast factorization algorithm because then the private exponent d is easily calculated from the public key (n,e) using the exact same steps that the original issuer used, namely the extended Euclid's algorithm can be used to find d as the multiplicative inverse of e modulo φ(n) = φ(p)φ(q) = (p-1)(q-1), which is basically what you wrote, too. So, my point is that "reliance" is transitive here. – Arne Vogel Oct 19 '22 at 09:54

The Miller-Rabin primality test works very well in practice as a probabilistic algorithm for finding "practical" (not provable) primes in cryptography, but the algorithm would become an efficient polynomial-time deterministic algorithm if the generalized Riemann hypothesis is true for all Dirichlet $L$-functions (well, maybe just for even characters is enough). In practice I don't think anybody in cryptography cares about GRH being true or not in order to be content using this primality test precisely because of the probabilistic version of it that does not depend on GRH, so this example might not strictly be an answer to the original question, but I think it is a good approximation to an answer.

- 49,546

-

8In particular, the probabilistic test is as good as the deterministic for applications, since with enough tests you can make it so the probability of error is the same as the probability of the deterministic test giving the wrong answer due to hardware errors. – Christopher King Jan 19 '18 at 01:33

-

-

-

2Relatedly, we could prove better running times for the original version of the AKS primality test if some conjecture about the distribution of Sophie Germain primes holds. I'm not sure if later versions of AKS "depend" on that conjecture. – David Richerby Jan 19 '18 at 14:21

-

@Kimball In practice the Miller test is significantly slower than APR-CL and ECPP, and the latter don't require the GRH. This is not a complete surprise as ECPP and the Miller test are both $O(\log^{(4+\epsilon)}(n))$. Other than simplicity and theory there isn't much reason for us to pine for this. Later versions of AKS do not rely on Sophie-Germain primes -- both Pomerance/Lenstra and the much faster (non-randomized) Bernstein variants do not rely on this. The original and updated versions all run in empirical $O(\log^{(6+\epsilon)}(n))$ time (very different constants though). – DanaJ Jan 19 '18 at 22:00

Although we have a proof now, I suspect grocers had intuitively stacked oranges in the most efficient way prior to the proof of Kepler's conjecture. I don't know for sure if any grocer was an applied mathematician.

https://www.newscientist.com/article/dn26041-proof-confirmed-of-400-year-old-fruit-stacking-problem/

- 4,608

- 2,143

-

21Most likely they were aware of Gauss' partial result for lattices... – James Smith Feb 02 '18 at 10:28

-

I love a problem I can relate to. (I finally get why everyone seems so interested in sphere packing :) – DukeZhou May 16 '18 at 03:45

Navier Stokes equations are believed to be well-posed.

- 2,261

-

7It would be best to cite the specific conjecture (instead of just stating it), and how it is used in applications. – Christopher King Jan 18 '18 at 23:00

-

8Is this even true (as stated)? My impression is that many people believe that the N-S equations are not necessarily well-posed and that they might blow up in finite time, but not for 'real-world' data. – Steven Stadnicki Jan 18 '18 at 23:45

-

7@StevenStadnicki Your point is exactly what i meant. The "applied mathematicians" believe that NS are well-posed for conditions that occur in applications. – Piyush Grover Jan 19 '18 at 01:13

-

1The conjecture is that the strong (regular) solutions of the Navier Stokes equations are globally well posed if the initial data have finite energy. Jean Leray constructed (1934) global weak solutions. For the appropriate formulation see an article by C.Fefferman on the milennium problem EXISTENCE AND SMOOTHNESS OF THE NAVIER–STOKES EQUATION. Although mathematicians seem to be more excited on the NS, more interesting and physically reliable are Euler equations of incompressible fluids.. – Artur Jan 19 '18 at 09:06

-

4I wonder if what people really believe in or rely on is something like, CFD simulations are a reasonable approximation to reality. Even if it turns out, mathematically, that NS has some pathological properties, it could turn out that nature doesn't quite obey NS after all. Nature could obey some well-behaved equations that are close to, but not exactly the same as, NS, and maybe CFD simulations are doing a good job of approximating the real equations. – Timothy Chow Jan 19 '18 at 15:55

-

I think NS is a good way to understand the underlying mathematical difficulties. We are yet not really able to handle real non-linearity. NS with small data or short time is more or less a perturbation of the linear case. Hence, I think it doesn't matter that much which (sufficiently difficult) system one investigates. – Sebastian Bechtel Jan 20 '18 at 22:11

-

As far as Euler equations go, however, while they admit an easier calculation of a solution in some cases, those solutions can come with utterly nonphysical behavior, including admitting an infinite speed of sound for incompressible fluids and infinitely thin shocks (i.e., singularities/discontinuities) without the diffusive terms added by viscosity and thermal conductivity for inviscid, nonconductive fluids. These can create stability problems in a large number of numerical approximation approaches when solving real-world problems using these equations. – Tristan Jan 23 '18 at 16:23

-

There are definitely phenomena occurring in the real world that are outside the scope of Navier-Stokes. For instance, cavitation. Whether cavitation is ever linked to failure of Navier-Stokes is certainly an open question. If global existence for Navier-Stokes fails, there must be an infinite negative pressure. This might be suggestive, but nothing more. – Michael Renardy Nov 17 '21 at 03:38

I think the biggest example is $P \neq NP$. Security experts routinely assume this to be true when designing security algorithms. The correct functioning of millions of machines depend on this.

I think its also the biggest example because if someone proves it false, then, depending on the details of the proof, the applications literally stop performing their intended function (or not, depending on the details of the proof).

- 2,542

- 5,715

-

28I think you are confusing $P=NP$ with "we can efficiently solve all NP problems". – Carlo Beenakker Jan 18 '18 at 21:26

-

12I second Carlo's comment. Just knowing that P=NP doesn't let us (for example) factor numbers effectively, for a variety of reasons. Among others, 1. the proof might not be constructive, so it doesn't give us a relevant algorithm, 2. the polynomial algorithm might have horrible constant which make it unusable in practice (that happened with AKS primality test, which, while polynomial time, is to this day too slow to be remotely efficient). – Wojowu Jan 18 '18 at 21:29

-

9@CarloBeenakker That's why I said "depending on the details of the proof". – Christopher King Jan 18 '18 at 21:31

-

4Of course this is your question and your rules, but my understanding of how the question is posed is that all that should matter is the conjecture itself, not hypothetical means of its (dis)proofs. By themselves, P=NP and its negation have basically zero impact on security, for reasons in my other comment. – Wojowu Jan 19 '18 at 08:24

-

@Wojowu AKS can be used with numbers with thousands of decimal digits. sure, you are not going to find the biggest prime in this way but it can be 100% used for practical applications like generating RSA keys etc. So: I think you chose a bad example for a big constant algorithm... – Bakuriu Jan 19 '18 at 21:30

-

@Bakuriu I have never heard of using AKS for generating RSA primes, to my knowledge probabilistic algorithms are used. Do you have a reference for AKS being used? – Wojowu Jan 19 '18 at 21:35

-

@Wojowu I have implemented AKS and used it on numbers from hundreds to 10k digits without many issues. It runs in the order of seconds to some minutes so it is not impractical, just slower. If you need such numbers for real-time encrypted sockets it's too slow, but it's fine for creating certificates/ssh keys (it could even take an hour it would not make much difference in that case). – Bakuriu Jan 19 '18 at 22:02

-

5@Bakuriu, you keep saying this, but you have never shared your results. These numbers you give are wildly different than any other published results. It is stunningly easy to write an incorrect AKS implementation that looks like it works, but isn't actually the AKS test. – DanaJ Jan 19 '18 at 22:03

-

@Bakuriu I haven't implemented it myself, but other people did and they have researched the real-time running time. I have found this plot, which indicates that even for a 100-digit prime the AKS running time gets into hours. – Wojowu Jan 19 '18 at 22:10

-

1Using the AKS algorithm from the paper on a 1000 digit input, r ~ 11 million. This means you loop 11 million times, each one doing a modular exponentiation on a degree-11-million polynomial. With Bernstein's 4.1 variant there are fewer than 1 million loops, but we also have loads of upfront trial division and Fermat tests required. – DanaJ Jan 20 '18 at 00:02

-

@Wojowu most applied mathematicians do assume this though, which was sort of the point. It probably wouldn't immediatly break all security, but it would at least require cryptographers to redo and recheck all their algorithms (and rethink how to prove an algorithm secure). – Christopher King Jan 20 '18 at 00:02

-

What machine depends on $P \neq NP$ for its correct functioning? This sentence confuses me. The fact that these machines seem to work correctly would then prove the conjecture...? – Najib Idrissi Jan 21 '18 at 08:13

-

@NajibIdrissi for a security algorithm to work correctly, malicious agents mustn't have access to it. This might not be the case if $P=NP$. – Christopher King Jan 21 '18 at 15:28

-

The algorithm would "work" correctly, it would simply be easier to bypass... – Najib Idrissi Jan 21 '18 at 17:31

-

@NajibIdrissi part of correction for these algorithms is not only the correct result, but also correct security properties. – Christopher King Jan 21 '18 at 17:32

Many cryptographic protocols are based on the problem which seem to be hard. Among them Discrete logarithm problem and its variants (Diffie–Hellman problem, Decisional Diffie–Hellman assumption, Elliptic Curve Discrete Logarithm Problem), Quadratic residuosity problem, lattice problems (Short integer solution problem, Shortest basis problem,...),...

- 11,875

-

2In fact, essentially the only known encryption scheme that is unconditionally secure is the one-time pad. There are a few other cryptographic (but not encryption) primitives that don't rely on any unproven assumptions, such as Shamir's secret sharing and Carter-Wegman MACs (when used with OTP). But those are still few and far between. – Ilmari Karonen Jan 22 '18 at 16:09

Tonelli–Shanks algorithm needs quadratic nonresidue $n$ modulo prime $p$. If the extended Riemann hypothesis is true, then the first quadratic nonresidue $n_p$ is always less than $3(\log p)^2/2$ (Wedeniwski 2001) for $ p>3$. Under this assumption Tonelli–Shanks algorithm becomes polynomial. Probabilistic heuristics (presuming that each non-square integer has a 50-50 chance of being a quadratic residue) suggests that $n_p$ should have size $O( \log p )$. The best unconditional estimate (Burgess) is $n_p\ll p^\theta$ for any $\theta >1/4\sqrt e$.

See also extended discussion at The least quadratic nonresidue, and the square root barrier.

- 11,875

-

1

-

@GerryMyerson Good upper bound on $n_p$ means that in this application one can search for $n_p$ looking at $n=2,3,\ldots$ – Alexey Ustinov Feb 02 '18 at 07:53

-

1Sure, but why would an applied mathematician want to know the first quadratic nonresidue? – Gerry Myerson Feb 02 '18 at 07:58

-

-

2OK, but what would an applied mathematician do with Tonelli-Shanks? – Gerry Myerson Feb 02 '18 at 11:13

-

1@GerryMyerson It depends on the definition of "applied mathematician". – Alexey Ustinov Feb 02 '18 at 22:42