I found some help material and guided by it tried to implement PCA using SVD in MAtlab for image compression.

I did it in this way:

I = imread('1.jpg');

I2 = rgb2gray(I);

inImageD=double(I3);

[U,S,V]=svd(inImageD);

C = S;

C(5+1:end,:)=0;

C(:,5+1:end)=0;

D=U*C*V';

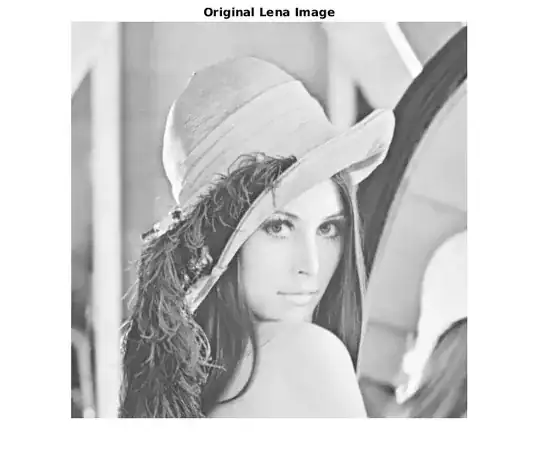

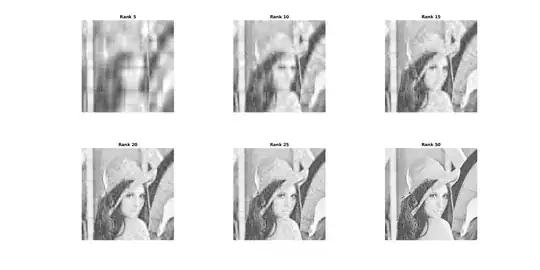

And now D contain a matrix that looks like my image but kindly smoothed, but this matrix has the same dimesion as my start image. I expected that PCA will reduce not only number of features but also dimension..

Obviosly I do not understand something.

Well - reduction here means that I can restore initial data with some precision from matrices that much less than my data?

But multiplication of these matrices gives me matrix with same dimension as my initial matrix.

I want to use reduced data for training a classifier. In this case what I shloud use dor training?

In a ML course on Coursera we learned to use PCA with SVD for dimensionality redcution:

Steps:

1) Suppose we have four images 50x50. We did form a matrix X 4x2500; First step is normalazing this matrix: X(:,j) = X(:,j) - Mean(X(:,j));

2) Calculate covariance matrix: Sigma = (1 / m) * X' * X;

3) [U, S, V] = svd(sigma);

4) Obtain reduced examples of images: Zi = U'reduce * X(1,:); where Ureduce is subset of matrix U. If we had an image with 2500 pixels then with this action we can obtain an image of, for example, 1600 pixels(any number, depends of how many columns we will leave in Ureduce from U).