I'm trying to follow a tutorial paper on generalizing Dirichlet Distribution Finite Mixture Models to Dirichlet Process Infinite Mixture Models;

- Li, Y., Schofield, E., & Gönen, M. (2019). A tutorial on Dirichlet process mixture modeling. Journal of Mathematical Psychology, 91, 128-144.

Some context to clarify my questions (at the end of this post) follows;

They start by deriving a Gibbs-sampling algorithm for the case of a Dirichlet distribution prior finite Gaussian mixture model (Section 2). In Section 3, they extend this to the infinite mixture case.

I get stuck following the derivation at Section 3.2: When $K$ approaches infinity. They start from

$$ p(c_1, \dots, c_k ~|~ \alpha) = \frac{\Gamma(\alpha)}{\Gamma(n + \alpha)} \prod_{k=1}^K \frac{\Gamma(n_k + \alpha / K)}{\Gamma(\alpha/K)} $$

where $c_i$ is a variable such that $c_i = k$ indicates the $i$th observation $y_i$ belongs to cluster $k$, $\alpha$ is the symmetric Dirichlet distribution concentration parameter, $n$ is the total number of observations, $n_k$ is the number of observations in cluster $k$, $K$ is the total number of clusters, and $\Gamma(\cdot)$ is the gamma function.

The derivations below then show that

$$ p(c_i = k ~|~\mathbf{c}_{-i}, \alpha) = \frac{n_{-i, k}+\alpha/K}{n-1+\alpha} $$

where $-i$ denotes all indicators except the $i$th, and "$n_{-i,k}$ represents the number of observations nested within the $k$th cluster excluding $y_i$".

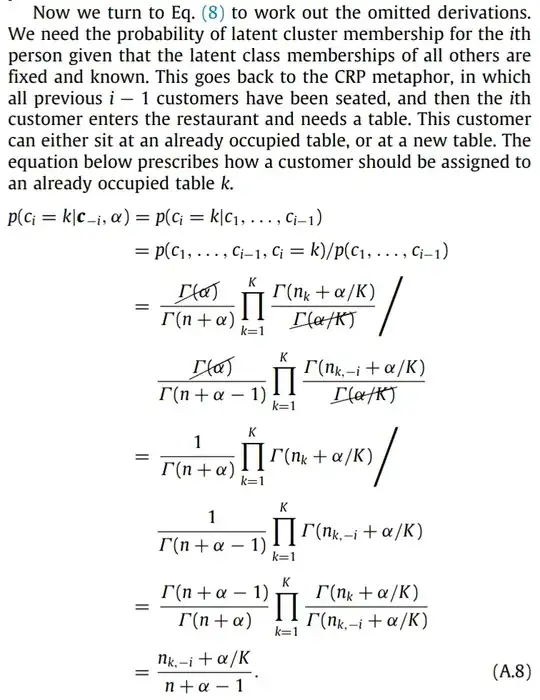

The derivation (from Appendix A.4) is included below;

My questions that I'm stuck on;

- What does "$n_{-i,k}$ represents the number of observations nested within the $k$th cluster excluding $y_i$" mean. Wouldn't this number just be equal to $n_k - 1$? Why the special notation?

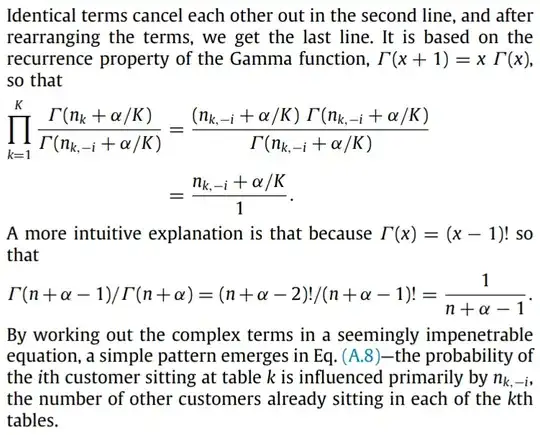

- I can follow the first 4 lines ($=$ signs) of the derivation, but get stuck at the jump from line 4 to line 5. For example, the equation just above the text "a more intuitive explanation..." - it seems that they have dropped the product operator. How did they acomplish this?