I was reading the MGB stats textbook, which says the following about "the problem of moments": "In general, a sequence of moments $\mu_1, \mu_2, \ldots, \mu_n, \ldots$ does not determine a unique distribution function; ... However, if the moment generating function of a random variable does exist, then this moment generating function does uniquely determine the corresponding distribution function."

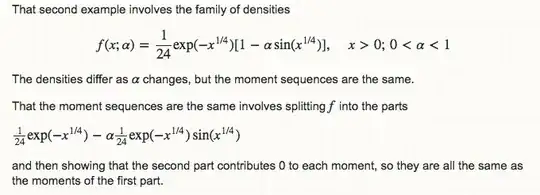

It is hard for me to see the difference between these two concepts (a sequence of moments vs. a moment generating function). I've looked through several posts on this topic, and I know that someone has come up with a counterexample: a particular family of densities that all share the same sequence of moments.
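A classic family of this kind (assumed here for illustration; it may not be the exact example from those posts) perturbs the standard lognormal density $f(x) = \frac{1}{x\sqrt{2\pi}} e^{-(\ln x)^2/2}$ to $f_a(x) = f(x)\,[1 + a\sin(2\pi \ln x)]$ for $-1 \le a \le 1$. Substituting $y = \ln x$ turns the $k$-th moment into $\int e^{ky}\varphi(y)\,[1 + a\sin(2\pi y)]\,dy$, and the sine term integrates to zero for every integer $k$, so every $f_a$ has the moments $\mu_k = e^{k^2/2}$. The sketch below (the function name `perturbed_lognormal_moment` is hypothetical) checks this numerically with Simpson's rule:

```python
import math

def perturbed_lognormal_moment(k, a, lo=-12.0, hi=16.0, n=100_000):
    """k-th moment of f_a(x) = f(x) * (1 + a*sin(2*pi*ln x)), f = standard lognormal.

    After substituting y = ln x, the integrand becomes
    exp(k*y) * phi(y) * (1 + a*sin(2*pi*y)), integrated over [lo, hi]
    with composite Simpson's rule (n must be even). The tails outside
    [lo, hi] are negligible for small k.
    """
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        y = lo + i * h
        phi = math.exp(-0.5 * y * y) / math.sqrt(2 * math.pi)  # standard normal pdf
        value = math.exp(k * y) * phi * (1 + a * math.sin(2 * math.pi * y))
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)  # Simpson weights 1,4,2,...,4,1
        total += weight * value
    return total * h / 3

for k in range(5):
    m_plain = perturbed_lognormal_moment(k, a=0.0)      # ordinary lognormal
    m_perturbed = perturbed_lognormal_moment(k, a=0.5)  # perturbed density
    print(k, m_plain, m_perturbed, math.exp(k * k / 2))
```

The two densities are genuinely different: at $x = e^{1/4}$ we have $\sin(2\pi \ln x) = 1$, so $f_{0.5}(x) = 1.5\,f(x)$, yet the printed moments agree.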

I have also read the proof that the moment generating function uniquely determines the distribution.

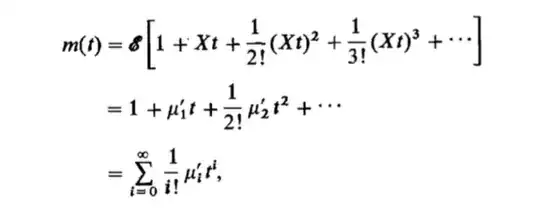

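But doesn't a sequence of moments define a moment generating function? After all, $m(t)$ can be written as

$$m(t) = E\left[e^{tX}\right] = \sum_{k=0}^{\infty} \frac{\mu_k t^k}{k!}, \qquad \mu_0 = 1.$$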
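One way to probe this formula is to compare the terms $\mu_k t^k / k!$ for two distributions (both chosen here for illustration, not taken from the textbook). For an Exponential(1) variable, $\mu_k = k!$, so the series is the geometric series $\sum_k t^k = 1/(1-t)$, which matches the mgf for $|t| < 1$. For the standard lognormal, $\mu_k = e^{k^2/2}$, and the logarithm of the $k$-th term, $k^2/2 + k\ln t - \ln k!$, grows without bound for every $t > 0$, so the series diverges even though every moment is finite. The helper names below are hypothetical:

```python
import math

def series_partial_sum(moment, t, kmax):
    """Partial sum of mu_k * t^k / k! for k = 0..kmax, given a function moment(k) = mu_k."""
    return sum(moment(k) * t**k / math.factorial(k) for k in range(kmax + 1))

def lognormal_log_term(k, t):
    """log of mu_k * t^k / k! for the standard lognormal, where mu_k = exp(k^2/2).

    Working on the log scale avoids overflow: exp(k^2/2) exceeds the
    float range once k is about 38.
    """
    return k * k / 2 + k * math.log(t) - math.lgamma(k + 1)

# Exponential(1): mu_k = k!, so the partial sum approaches 1 / (1 - t).
t = 0.5
print(series_partial_sum(math.factorial, t, 60), 1 / (1 - t))

# Standard lognormal with a small t: the log of the k-th term eventually keeps growing.
for k in (10, 20, 40):
    print(k, lognormal_log_term(k, 0.01))
```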

Can someone fill in the gap for me? Thanks so much!