This question is purely theoretical, but it seems to me that it shouldn't be impossible.

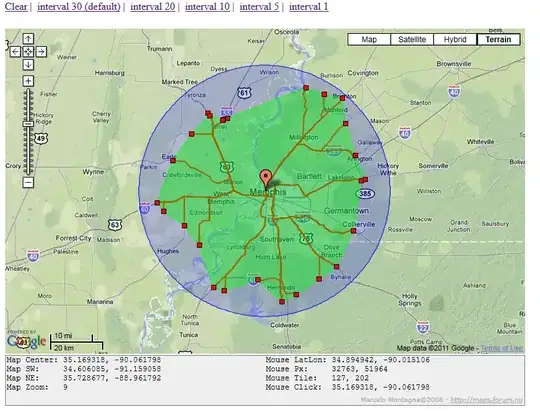

Google is essentially a big web spider that starts at one page and indexes everything reachable from it (say, starting from some page about basketball). How difficult or impossible would it be to do the same with roads: give it a starting point (GPS coordinates) in a city and have it find all the roads and intersections within, for example, a 10-mile radius? Basically, 'crawl' outward and keep track of road names, GPS points, and intersections wherever the road forks.
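To make the idea concrete, here is a rough sketch of the kind of crawl I have in mind: a breadth-first search outward from the starting point that stops at the radius. Everything in it is an assumption for illustration. `TOY_MAP`, `toy_neighbors`, and the coordinates are made-up stand-ins for whatever a real map data source would return, and `crawl_roads` is just a hypothetical name.

```python
import math
from collections import deque

EARTH_RADIUS_MILES = 3958.8  # approximate mean Earth radius


def haversine_miles(a, b):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def crawl_roads(start, get_neighbors, radius_miles):
    """Breadth-first crawl from `start`.

    `get_neighbors(point)` is assumed to return (road_name, next_point) pairs
    for every road segment leaving `point`. Returns the set of points reached
    and the set of road names seen within the radius.
    """
    visited = {start}
    roads = set()
    queue = deque([start])
    while queue:
        point = queue.popleft()
        for road_name, next_point in get_neighbors(point):
            # Skip segments whose far end falls outside the radius.
            if haversine_miles(start, next_point) > radius_miles:
                continue
            roads.add(road_name)
            if next_point not in visited:
                visited.add(next_point)
                queue.append(next_point)
    return visited, roads


# Hypothetical toy data: four intersections, one of them far outside the radius.
A = (40.00, -75.00)
B = (40.01, -75.00)
C = (40.01, -75.01)
D = (41.00, -75.00)  # roughly 69 miles north of A

TOY_MAP = {
    A: [("Main St", B)],
    B: [("Main St", A), ("Oak Ave", C), ("Highway 1", D)],
    C: [("Oak Ave", B)],
    D: [("Highway 1", B)],
}


def toy_neighbors(point):
    """Stand-in for a real map lookup; returns the segments leaving `point`."""
    return TOY_MAP.get(point, [])
```

Calling `crawl_roads(A, toy_neighbors, 10)` on this toy data would visit A, B, and C and skip D. The hard part, as far as I can tell, is the real version of `toy_neighbors`, which is where the map API would come in.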

The Google Maps API would probably be very useful here; the only speed bump might be its limit on web requests. I could use OpenStreetMap instead, but I'm not sure its data would be as easy to access. I'd like to write as little XML parsing as possible.