The problem is that numerical differentiation is considered an ill-posed problem because a small change in the input parameters results in big changes at output. Using other schemes, such as centred differences, instead of progressive differences might give you slightly better results, but it does not solve the issue.

There are multiple ways to solve this, apart from the ones answered by other users. Let me show you some of them, ordered from simpler to more complex.

In all the examples I am using $f(x) = \cos^2(x) + \sin(x) + \epsilon (x)$ as the function that I want to take the derivative of (where $\epsilon (x)$ is a small error, $|\epsilon (x)| < 0.01$). The derivative should be $f'(x) = - 2 \cos(x) \sin(x) + cos(x)$, but I won´t get that result a priori because of the noise.

- Approximate the function to $g(x)$. Being $g(x)$ smoother. Polynomial fitting, for example. You can also use least-squares method to approximate the function to a non-polynomial.

For example, I will use, $g(x) = a_0 + a_1 sin(x) + a_2 cos(x) + a_3 sin(2x) + a_4 cos(2x)$. Here is the code in MATLAB:

A = [ones(size(x,2), 1), sin(x'), cos(x'), sin(2*x'), cos(2*x')];

A_sist = A'*A;

b_sist = A'*y_noised;

sol = A_sist\b_sist;

y_fitted = sol(1) + sol(2)sin(x) + sol(3)cos(x) + sol(4)sin(2x) + sol(5)cos(2x);

- Add a term that penalizes strong changes in the derivative.

Instead of computing the derivative at each point, $d_0, d_1, ..., d_{n-1}$ as

$d_k = \frac{f_{k+1}-f_k}{\Delta x}, \;\; x=0,...,n-1$

compute them such that the function

$F_1(d_0, ..., d_{n-1}) = \sum_{k=0}^{n-1}\left(d_k - \frac{f_{k+1}-f_k}{\Delta x}\right)^2 + \alpha \sum_{k=0}^{n-2}\left(\frac{d_{k+1}-d_k}{\Delta x}\right)^2 $

is minimized. $\alpha$ is a penalty term, that shouldn’t be too hight to avoid over-regularization. The minimum is obtained when $\delta F_1 / \delta d_0 = \delta F_1 / \delta d_1 = ... = \delta F_1 / \delta d_n = 0 $. If you take a piece of paper and a pencil you should be able to compute those derivatives and write it all as a linear system of equations. We obtain the following system:

$ A D = B $

Where $B = (b_0, b_1, ..., b_n)^T; \;\; b_k = (f_{k+1} - f_k)/\Delta x $, $D = (d_0, d_1, ..., d_{n-1})$, and $A = I + \frac{\alpha}{(\Delta x)^2} A_1$. $A_1$ is a tridiagonal matrix where the main diagonal is $(-1, 2, ..., 2, -1)$ and the two other diagonals have $-1$ everywhere.

The cool thing is that now A is a well-conditioned matrix, so you won´t get big variations in the solution when there are small variations in the function.

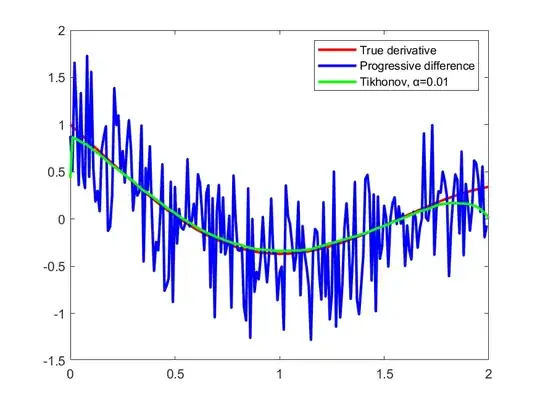

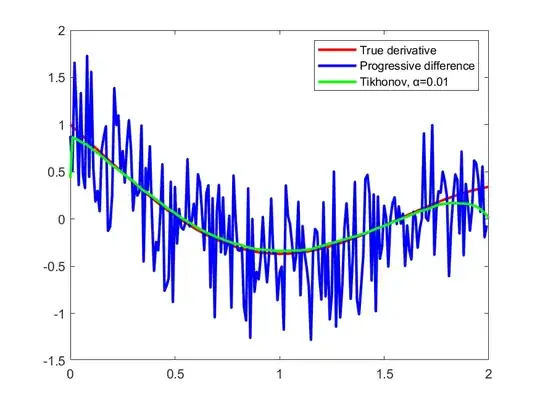

- Write the problem as a linear system and use Tikhonov or Landweber method to regularize it.

We are looking for $D(x) = f'(x)$. We know that if $f(x)$ is continuous,

$ \int_{x_0}^{x} D(t) dt = \int_{x_0}^{x} f'(t) dt = f(x) - f(x_0) $

Let $g(x) = f(x) - f(x_0)$. You can aproximate the integral $\int_{x_0}^{x_k} D(t) dt = g(x_k)$ using the trapezoidal rule:

$ \int_{x_0}^{x_k} D(t) dt \approx \frac{\Delta x}{2} \left( D(x_0) + 2 \sum_{j=1}^{k-1} D(x_j) + D(x_k) \right) $

If we call $d = (D(x_0), ..., D(x_{n-1})$ and $g = (g(x_0), ..., g(x_{n-1})$, then, we end up with a linear system of the form $A d = g$, that can be solved using some regularization algorithm.

I won´t show the entire procedure because it is quite long, but here are the results I got with Tikhonov:

And here are the results I got with Landweber:

I hope this helped.

diff()to be your choice. Basically this function subtracts two values. Check the documentation of Matlab. I'm assuming you are using Matlab though. – CroCo Jun 02 '14 at 01:00