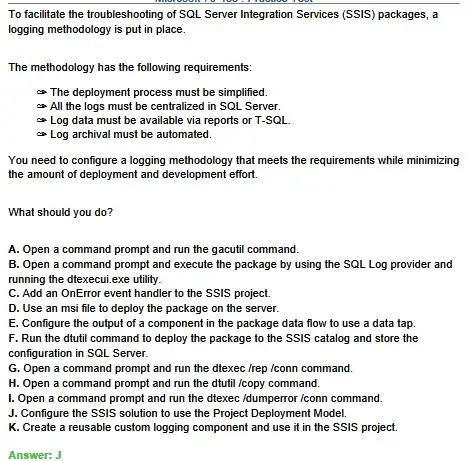

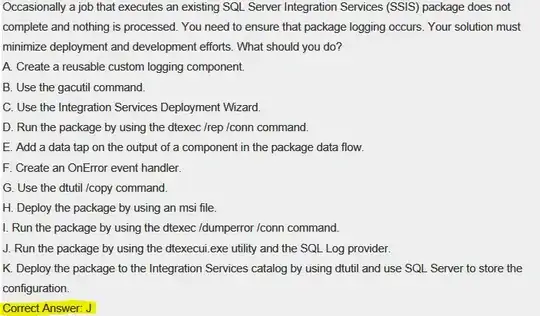

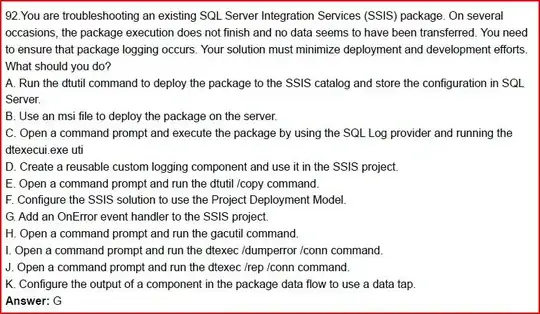

Root question

Why would using the Project Deployment Model provide the lowest cost of logging assuming the desire is to have

- Centralized

- automated

- SQL Server based

Answer

Because it's built in. The new project model automatically logs at the Basic level when executed on the server. For completeness, your available logging options are None, Basic, Performance, Verbose. Basic is going to have everything you need for a standard run. If it fails, you will know information about the failure - what failed and why. You'll have start and stop times for all your executables and as the first sentence mentions, you don't have to do a single thing to get it.

Prior to this, a new package is created, goes through all the SOX/SAS-70 deployment checks and what not and it blows up in production. Look at the logs to see what happened and oh snap, nobody turned logging on. The package must then be edited which requires running back through all the approval and testing process and generally is a PITA.

Finally, if you add on OnError event handler to the package great, but now what? You will need to write your own logic, maybe a stored proc, whatever, to do something with the error. That's probably not going to meet the automated requirement.

dtexecui is just a gui for dtexec which is used whether you're using the project deployment or the classic package deployment model. It's just a matter of whether you indicate a package lives inside an ispac/ssisdb or not.

Final thoughts

Study up on the Project Deployment Model. Really dig in and play through how all this works. The test is trying to ascertain whether you understand what comes part and parcel with it so you can deliver the most effective solutions.