The biggest concern is that nvarchar uses 2 bytes per character, whereas varchar uses 1. Thus, nvarchar(4000) uses the same amount of storage space as varchar(8000)*.
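
As a quick check of the 2-bytes-versus-1 claim, `DATALENGTH` reports the number of bytes a value occupies. This is a minimal sketch; the variable names are only for illustration, and it assumes a single-byte code page for the varchar value.

```sql
-- Same five characters, stored as varchar and as nvarchar.
DECLARE @v varchar(20)  = 'hello';
DECLARE @n nvarchar(20) = N'hello';

SELECT DATALENGTH(@v) AS varchar_bytes,   -- 5
       DATALENGTH(@n) AS nvarchar_bytes;  -- 10
```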

In addition to all of your character data needing twice as much storage space, this also means:

- You may have to use shorter nvarchar columns to keep rows within the 8060 byte row limit/8000 byte character column limit.

- If you're using nvarchar(max) columns, they will be pushed off-row sooner than varchar(max) would.

- You may have to use shorter nvarchar columns to stay within the 900-byte index key limit (1,700 bytes for nonclustered indexes as of SQL Server 2016). I don't know why you would want to use such a large index key, but you never know.
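
To see how declared lengths translate into the byte counts these limits are measured in, you can inspect `max_length` in `sys.columns`, which is reported in bytes. A rough sketch follows; the temp table `#demo` and its column names are hypothetical.

```sql
-- Hypothetical temp table, used only to compare declared length with byte length.
CREATE TABLE #demo
(
    v varchar(900),
    n nvarchar(450),
    m nvarchar(max)
);

-- max_length is in bytes: v = 900, n = 900 (450 characters * 2), m = -1 (max).
SELECT name, max_length
FROM tempdb.sys.columns
WHERE object_id = OBJECT_ID('tempdb..#demo');

DROP TABLE #demo;
```

So nvarchar(450) consumes the same 900 bytes of index key as varchar(900).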

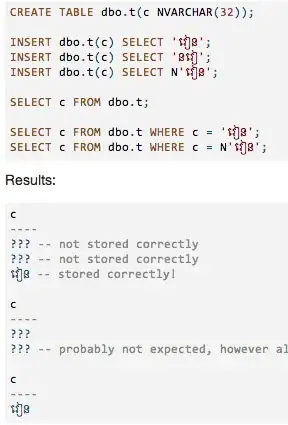

Besides that, working with nvarchar isn't much different, assuming your client software is built to handle Unicode. SQL Server implicitly converts varchar to nvarchar where needed, so you don't strictly need the N prefix on string literals unless the literal contains characters that the varchar code page cannot represent (without the prefix, such characters are silently replaced). Be aware that casting nvarchar to varbinary yields different results than doing the same with varchar. The important point is that you won't have to immediately change every varchar literal to an nvarchar literal to keep the application working, which helps ease the process.
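
A short sketch of both points, using throwaway variable names: a plain varchar literal is implicitly converted when assigned to or compared with nvarchar, while the varbinary form of the same character differs between the two types.

```sql
-- A varchar literal assigned to an nvarchar variable is converted implicitly.
DECLARE @n nvarchar(10) = 'abc';
SELECT CASE WHEN @n = N'abc' THEN 'equal' ELSE 'different' END AS comparison;  -- equal

-- The byte representation differs: nvarchar is stored as UTF-16, little-endian.
SELECT CAST('A'  AS varbinary(4)) AS varchar_bytes,   -- 0x41
       CAST(N'A' AS varbinary(4)) AS nvarchar_bytes;  -- 0x4100
```

If any code hashes or compares the varbinary form of a string, it will need attention when the column type changes.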

\* If you use data compression (the lightweight row compression is enough; Enterprise Edition required before SQL Server 2016 SP1), you will usually find nchar and nvarchar take no more space than char and varchar, due to Unicode compression (using the SCSU algorithm).
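
If you want to try this, the following is a sketch of estimating and then enabling row compression. The table `dbo.MyTable` is a placeholder; substitute your own, and test on a non-production copy first since a rebuild can be expensive on large tables.

```sql
-- Estimate the savings before committing to a rebuild (dbo.MyTable is hypothetical).
EXEC sp_estimate_data_compression_savings
     @schema_name      = 'dbo',
     @object_name      = 'MyTable',
     @index_id         = NULL,   -- all indexes
     @partition_number = NULL,   -- all partitions
     @data_compression = 'ROW';

-- Enable row compression, which includes Unicode compression for nchar/nvarchar.
ALTER TABLE dbo.MyTable REBUILD WITH (DATA_COMPRESSION = ROW);
```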