During the migration of a 300 million rows table I have encountered a strange behaviour on our AWS Aurora database:

Around 25 minutes into the migration, the total IOPS of the database dropped to almost 0 and it seemed to be idling without waits and did not return - seemingly stuck in the operation.

How can this behaviour be explained? Is it related to Aurora and the read replicas? I do expect that the migration can run on Aurora as it did on another PostgreSQL RDS instance.

Quick facts:

- AWS Aurora PostgreSQL database, 3 replicas (same instance/class/size), 2 reader, multi AZ.

- Huge table: Migration affects ~100 million rows of ~330 million total rows.

- Migration was split into 3 chunks of 100 million rows.

- Dead tuples where cleaned up before migration with manual vacuum.

- Migration ran successfully on dev environment (db copy on PostgreSQL RDS instance) in about 70 minutes

Chunk 1 query in question:

-- Expected affected rows: 15154380

UPDATE debtclaim_event

SET acceptance_mode = 'NORMAL',

modifiedon = now(),

modifiedby = 'system#FC-7868',

optlock = optlock + 1

WHERE type = 'ACCEPTED'

AND id < 100000000;

related explain / analyse:

EXPLAIN ANALYSE SELECT * FROM debtclaim_event WHERE type = 'ACCEPTED' AND acceptance_mode IS NULL;

resulted in:

Seq Scan on debtclaim_event (cost=0.00..10236490.40 rows=33147254 width=550) (actual time=7.737..141019.913 rows=33358994 loops=1)

Filter: ((acceptance_mode IS NULL) AND (type = 'ACCEPTED'::text))

Rows Removed by Filter: 265944598

Planning Time: 0.096 ms

Execution Time: 142877.726 ms

Further steps

I tried to reduce the chunk size to 10 million. This resulted in the first chunk passing (which had no updates) and the second chunk passing with ~1,4 milltion rows altered in about 3 minutes.

The third chunk showed a similar behavoiur, as it dropped total iops after about 6 minutes and then idling for 15 more minutes, not returning.

Besides that I did not try to optimize the migration further. A possible way forward could be a new table creation, instead of updating the original one, as described in another post.

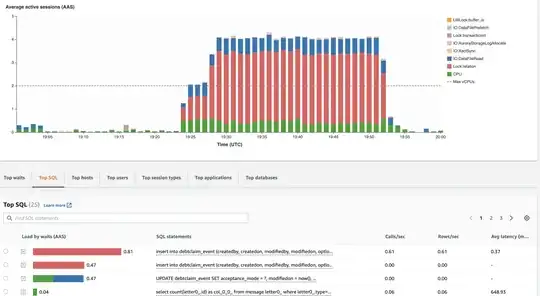

More insights from AWS RDS

During the operation other sessions tried to access and alter the table in question, but those mostly waited:

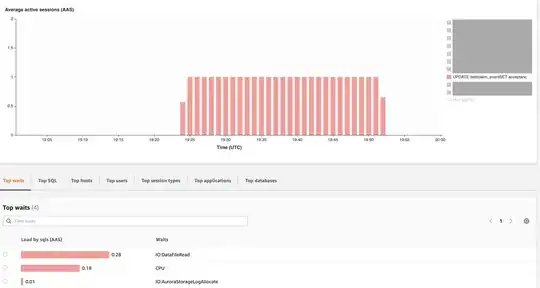

Here is another graph depicting the operation in further detail: