Assumptions

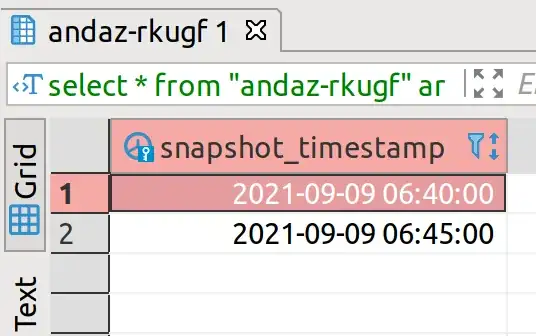

You did not declare, but the key symbol in the screenshot indicates that snapshot_timestamp is defined as PRIMARY KEY.

There cannot be concurrent writes to involved rows.

Else you need to do more.

The best query depends on details of your setup - most importantly data distribution.

For few rows per 10-minute interval

WITH keep AS (

SELECT min(snapshot_timestamp) AS snapshot_timestamp

FROM "andaz-rkugf"

WHERE snapshot_timestamp <= '2018-10-31+0' -- ①

GROUP BY extract(epoch FROM snapshot_timestamp)::bigint / 600 -- ②

)

DELETE FROM "andaz-rkugf" a

WHERE snapshot_timestamp <= '2018-10-31+0'

AND NOT EXISTS (

SELECT FROM keep k

WHERE a.snapshot_timestamp = k.snapshot_timestamp

);

db<>fiddle here

The CTE keep selects the minimum timestamp for every 10-minute interval before the given cut-off.

Notably, timestamps do not have to fall on 10-minute boundaries exactly. This query works with any timestamps.

② extract (epoch FROM snapshot_timestamp) extracts the number of seconds since the epoch. Cast to bigint and divide by 600 (seconds in a 10-minute interval). Integer division truncates, timestamps in the same 10-minute interval fall into the same group. Take min() per group (or whatever you prefer). See:

The main DELETE removes all rows before the cut-off that aren't keepers, pinned down with NOT EXISTS. Related:

For more than a few percent of rows before the cut-off, the PK index is not going to be used at all, a sequential scan will be cheaper. But the PK index is still essential to rule out ties with duplicates.

① '2018-10-31' is a perfectly valid timestamp literal. 00:00:00 will be assumed for the missing time component. Don't cast it to date, that wouldn't do anything useful. Either way, without offset, the time zone setting of the current session is assumed. While operating with timestamptz, it's cleaner to declare the time zone explicitly to avoid sneaky corner-care errors. '2018-10-31 00:00:00+0' or '2018-10-31+0' for short in my example declares UTC time.

Large table

For large tables it should pay to create a temporary table with index instead of the CTE. (Maybe not for deleting a small part of "just" 100k rows.) Like:

CREATE TEMP TABLE keep AS

SELECT min(snapshot_timestamp) AS snapshot_timestamp

FROM "andaz-rkugf"

WHERE snapshot_timestamp <= '2018-10-31'

GROUP BY extract(epoch FROM snapshot_timestamp)::bigint / 600

ORDER BY 1;

CREATE INDEX ON keep (snapshot_timestamp);

ANALYZE keep;

DELETE FROM "andaz-rkugf" a

WHERE snapshot_timestamp <= '2018-10-31'

AND NOT EXISTS (

SELECT FROM keep k

WHERE a.snapshot_timestamp = k.snapshot_timestamp

);

db<>fiddle here

Run all in the same session. (Not necessarily the same transaction.)

Sufficient temp_buffers will keep the temp table in RAM (faster).

For many rows per 10-minute interval

Meaning, the lion share is deleted. It will be faster to just delete all of them and re-insert the few "keepers":

WITH del AS (

DELETE FROM "andaz-rkugf" a

WHERE snapshot_timestamp <= '2018-10-31'

RETURNING *

)

INSERT INTO "andaz-rkugf"

SELECT min(snapshot_timestamp)

FROM del

GROUP BY extract(epoch FROM snapshot_timestamp)::bigint / 600

ORDER BY 1; -- optional

db<>fiddle here