I'm trying to identify an issue I sometime experience on a stored procedure.

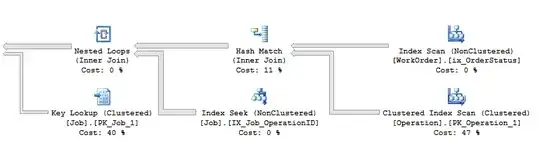

I have to say that the stored procedure uses an UDF in the select clause (perhaps that's the cause of the problem) but anyway, whether it's not, here below a part of the execution plan:

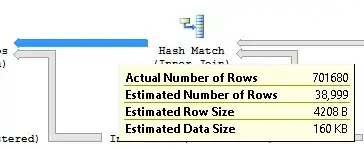

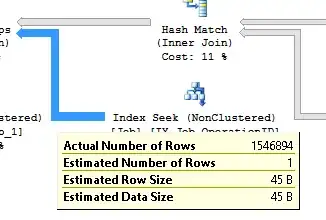

where both the output estimated rows of the Hash Match and the estimated of the Index_Seek on the non clustered index IX_Job_OperationID are completely wrong:

I've tried to update the statistic related to the index IX_Job_OperationID, even with fullscan option, but it didn't help.

UPDATE STATISTICS Job [IX_Job_OperationID] with fullscan

I'm also using the recompile option in the stored because given the parameters provided the dataset involved may change a lot.

Can someone point me to the right direction and help me understand why even though statistics have been updated the Estimated values are so far from Actual?

Here a link to the actual execution plan

This execution plan causes a total of 8,610,665 logical reads, while other better plan that somehow are chosen can be around 122,692