I have a table with roughly 11 million rows, defined as:

CREATE TABLE [sko].[stage_närvaro](

[datum_fakta] [datetime] NULL,

[person_id] nvarchar NULL,

[läsår_fakta] nvarchar NULL,

[termin_fakta] nvarchar NULL,

[period_fakta] nvarchar NULL,

[vecka_fakta] nvarchar NULL,

[veckodag_fakta] nvarchar NULL,

[ämne_id] nvarchar NULL,

[ämne] nvarchar NULL,

[frånvaro_min] [float] NULL,

[närvaro_min] [float] NULL,

[frånvaroorsak_id] nvarchar NULL,

[frånvaroorsak] nvarchar NULL,

[beskrivning] nvarchar NULL,

[personal_id] nvarchar NULL,

[försystem] nvarchar NULL

)

With the following non-clustered index:

CREATE NONCLUSTERED INDEX [stage_skola_närvaro_ix1] ON [sko].[stage_närvaro]

(

[person_id] ASC,

[termin_fakta] ASC,

[läsår_fakta] ASC

)

When I run the following delete query, it takes atleast 2+ hours to complete.

DELETE sko.stage_närvaro

FROM sko.stage_närvaro e

WHERE försystem = 'Extens'

AND EXISTS (

SELECT *

FROM ext.v_imp_närvaro v

WHERE e.person_id = v.person_id

AND e.termin_fakta = v.termin_fakta

AND e.läsår_fakta = v.läsår_fakta

)

Is my delete-query using my index? Would it help to disable the index before deleting, and enable it afterwards?

Edit:

The view ext.v_imp_närvaro has the same amount of rows as the table sko.stage_närvaro.

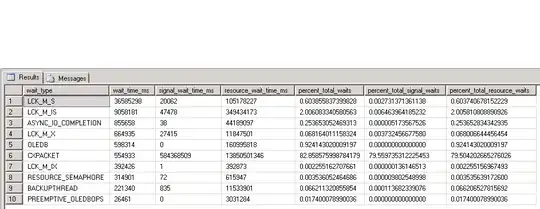

Edit2: I suspected that it was an I/O issue, so I ran the following query as suggested by DaniSQL here:

SELECT TOP 10

wait_type ,

max_wait_time_ms wait_time_ms ,

signal_wait_time_ms ,

wait_time_ms - signal_wait_time_ms AS resource_wait_time_ms ,

100.0 * wait_time_ms / SUM(wait_time_ms) OVER ( ) AS percent_total_waits ,

100.0 * signal_wait_time_ms / SUM(signal_wait_time_ms) OVER ( ) AS percent_total_signal_waits ,

100.0 * ( wait_time_ms - signal_wait_time_ms )

/ SUM(wait_time_ms) OVER ( ) AS percent_total_resource_waits

FROM sys.dm_os_wait_stats

WHERE wait_time_ms > 0 -- remove zero wait_time

AND wait_type NOT IN -- filter out additional irrelevant waits

( 'SLEEP_TASK', 'BROKER_TASK_STOP', 'BROKER_TO_FLUSH', 'SQLTRACE_BUFFER_FLUSH',

'CLR_AUTO_EVENT', 'CLR_MANUAL_EVENT', 'LAZYWRITER_SLEEP', 'SLEEP_SYSTEMTASK',

'SLEEP_BPOOL_FLUSH', 'BROKER_EVENTHANDLER', 'XE_DISPATCHER_WAIT',

'FT_IFTSHC_MUTEX', 'CHECKPOINT_QUEUE', 'FT_IFTS_SCHEDULER_IDLE_WAIT',

'BROKER_TRANSMITTER', 'FT_IFTSHC_MUTEX', 'KSOURCE_WAKEUP',

'LAZYWRITER_SLEEP', 'LOGMGR_QUEUE', 'ONDEMAND_TASK_QUEUE',

'REQUEST_FOR_DEADLOCK_SEARCH', 'XE_TIMER_EVENT', 'BAD_PAGE_PROCESS',

'DBMIRROR_EVENTS_QUEUE', 'BROKER_RECEIVE_WAITFOR',

'PREEMPTIVE_OS_GETPROCADDRESS', 'PREEMPTIVE_OS_AUTHENTICATIONOPS', 'WAITFOR',

'DISPATCHER_QUEUE_SEMAPHORE', 'XE_DISPATCHER_JOIN', 'RESOURCE_QUEUE' )

ORDER BY wait_time_ms DESC

With the following result. I'm not sure how to interpret them though.

v_imp_närvaroand the tables (& their indexes) it uses we can't really tell, but it could be table scanningv_imp_närvarofor rows matchingförsystem = 'Extens'then either scanning the tables used in the view & ordering in tempdb for matching or running the sub-query per row (in either case this could cause a lot of IO). The delete itself might be relatively inexpensive, dependig on how many rows it affects in the end. I suggest you add to the question at least the estimated and actual query plans, and preferably the view/table/index definitions too. – David Spillett Jul 18 '16 at 13:57