curl can only read single web pages; the bunch of lines you got is actually the directory index (which you also see in your browser if you go to that URL). To fetch the files with curl and a bit of Unix tool magic, you could use something like:

    for file in $(curl -s http://www.ime.usp.br/~coelho/mac0122-2013/ep2/esqueleto/ |
                  grep href |
                  sed 's/.*href="//' |
                  sed 's/".*//' |
                  grep '^[a-zA-Z].*'); do
        curl -s -O "http://www.ime.usp.br/~coelho/mac0122-2013/ep2/esqueleto/$file"
    done

which will get all the files into the current directory.
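The same idea can be split into two small functions, which makes the link extraction easy to check on its own. This is only a sketch: the names `extract_links` and `fetch_all` are made up for illustration, it assumes a `grep` that supports `-o` (GNU and BSD grep both do), and it assumes the index is a typical Apache-style listing whose file links are plain relative names.

```shell
#!/bin/sh
# Hypothetical helper: read a directory index (HTML) on standard input and
# print the linked file names, one per line.
extract_links() {
    # 1. grep -o prints each href="..." match on its own line, so several
    #    links on one HTML line are all picked up.
    # 2. sed strips the href="..." wrapper.
    # 3. The first filter keeps names starting with a letter or digit, which
    #    drops the sort links (?C=N;O=D) and absolute paths (/...).
    # 4. The last filter drops subdirectories (trailing /) and full URLs.
    grep -o 'href="[^"]*"' |
    sed 's/^href="//; s/"$//' |
    grep '^[a-zA-Z0-9]' |
    grep -v -e '/$' -e '://'
}

# Hypothetical helper: download every file listed in the index at $1.
# Assumes $1 ends with a slash, so that "$1$file" is a valid URL.
fetch_all() {
    base_url=$1
    curl -s "$base_url" | extract_links |
    while IFS= read -r file; do
        curl -s -O "$base_url$file"
    done
}
```

With these defined, `fetch_all http://www.ime.usp.br/~coelho/mac0122-2013/ep2/esqueleto/` should behave like the loop above. Reading names with `while read` instead of `for file in $(...)` avoids word splitting on unusual file names.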

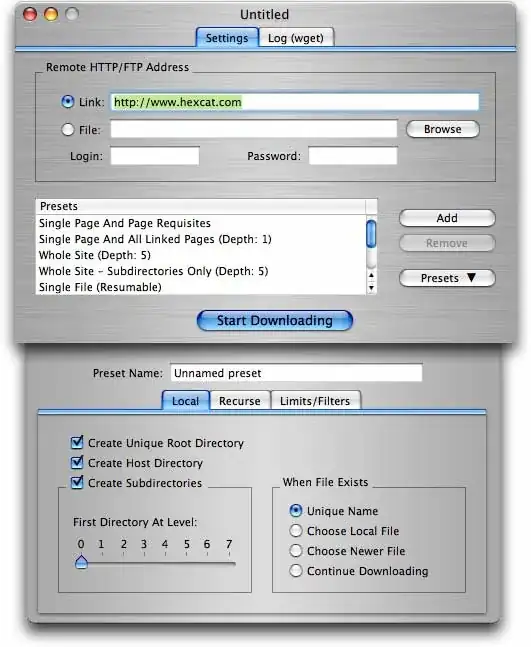

For more elaborate needs (including getting a bunch of files from a site with folders/directories), wget (as proposed in another answer already) is the better option.
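As a rough sketch of what the wget route could look like for the same directory: the wrapper name `mirror_dir` is made up for illustration, and the exact set of flags is an assumption about what you want (all files in the current directory, no copies of the index pages).

```shell
#!/bin/sh
# Hypothetical wrapper: recursively fetch everything below the URL in $1.
#   -r   follow links recursively
#   -np  never ascend to the parent directory
#   -nd  do not recreate the remote directory tree; save files here
#   -R   reject the generated index pages (index.html, index.html?C=N;O=D, ...)
mirror_dir() {
    wget -r -np -nd -R 'index.html*' "$1"
}
```

For example, `mirror_dir http://www.ime.usp.br/~coelho/mac0122-2013/ep2/esqueleto/`. Drop `-nd` if you would rather keep the folder structure of the site.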

wget -r -np -k http://your.website.com/specific/directory. The trick is to use -k to convert the links (images, etc.) for local viewing. – yPhil Dec 11 '14 at 15:44

brew and port doesn't work for me to install wget. What should I do? – Hoseyn Heydari Jan 27 '16 at 15:58

-k does not always work. E.g., if you have two links pointing to the same file on the webpage you are trying to capture recursively, wget only seems to convert link of the first instance but not the second one. – Kun Jan 28 '17 at 19:45

/ at the end. – khatchad Nov 25 '20 at 17:34

index.html. – khatchad Nov 10 '21 at 18:51